If you’ve ever suspected there was something baleful about our deep trust in data, but lacked the mathematical skills to figure out exactly what it was, this is the book for you: Cathy O’Neil’s “Weapons of Math Destruction” examines college admissions, criminal justice, hiring, getting credit, and other major categories. The book demonstrates how the biases written into algorithms distort society and people’s lives.

But the book, subtitled “How Big Data Increases Inequality and Threatens Democracy,” is also a personal story of someone who fell for math and data at an early age, but became harshly disillusioned. As she looked more deeply, she came to see how unjust and unregulated the formulas that govern our lives really are. Though the book mostly concerns algorithms and models, it’s rarely dry.

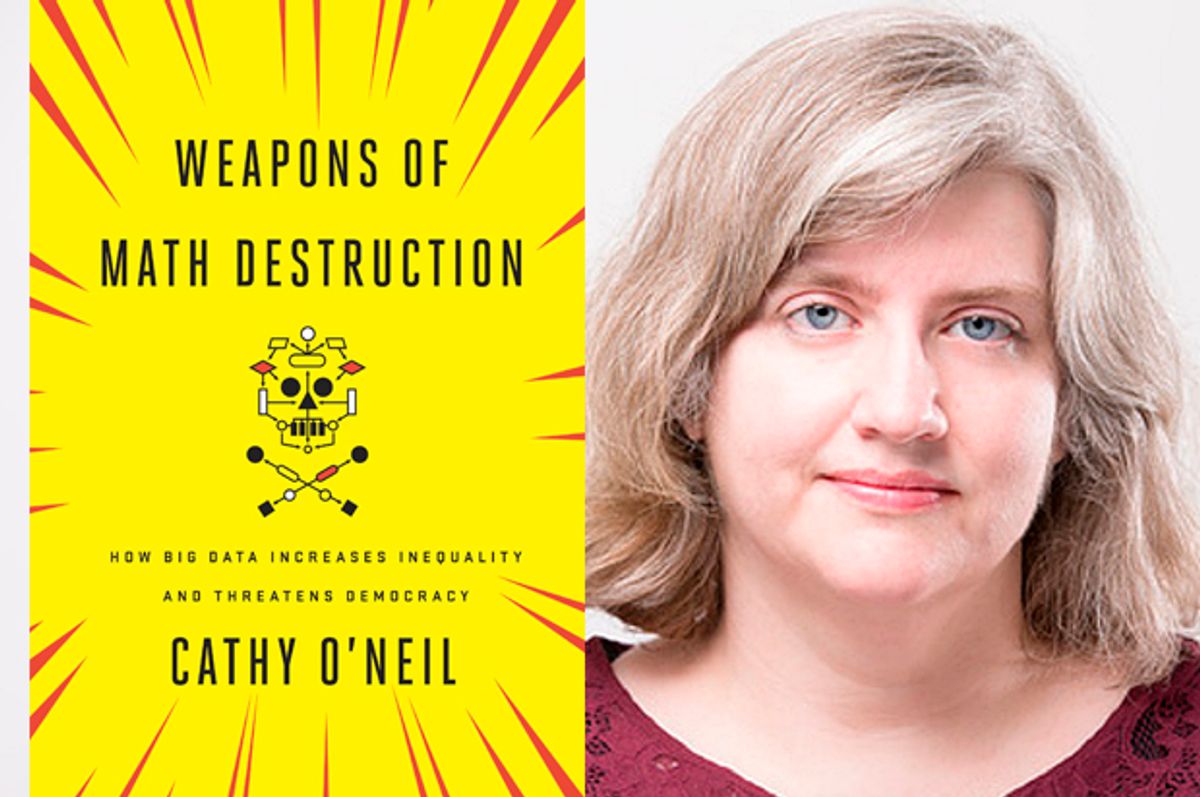

We spoke to O’Neil, a data scientist, blogger, and former Wall Street quant, from New York City. The interview has been lightly edited for clarity.

So let's start from the beginning. You were attracted to math as a kid, and you later became a quant. What drew you to data, and what did it seem to promise when you were first becoming fascinated by it?

Well, I was drawn to mathematics because it seemed to me so clean, so true. So honest, like people who are examining their assumptions are being extremely careful with their reasoning. It’s purely logical. And I had this extremely idealistic naive approach to leaving academic math and going into finance. I had this idea that we could bring this kind of true, pure logic into the real world. And I entered in 2007, just in the nick of time to get a front-row seat to the financial crisis.

And what caused the financial crisis — at a fundamental level, it relies on mathematics in the form of those AAA ratings on mortgage-backed securities which were trusted by the international community essentially because they were represented by mathematics.

Right. They seemed without bias and unimpeachable, objective because they were quantified, right?

Right. Because they were supposed to be representing a fair, objective measure of the risk inherent in the securities based on historical data and complicated, sophisticated mathematics.

Can you give us some other examples of the real-world stakes of what you write about in your book?

I left finance disgusted, and wanted to find a refuge to do my thing… In a morally positive way. And I found myself as a data scientist in the start-up world of New York City… Hoping to feel better about myself.

What I found was that the stuff I was doing was actually, in its own way, also a mathematical lie, a mathematical deception.

It wasn't the stuff I was working on, but in the very worst manifestation it was actually kind of a weaponized mathematical algorithm. I was working in online advertising. Most of the people working in online advertising represented it as a way of giving people opportunities. That's true for most technologists, most educated people, most white people.

On the other side of the spectrum you have poor people, who are being preyed upon by the same kinds of algorithms. They’re being targeted by payday lenders or for-profit colleges. If you think about how the Google auction works, the people that can get the most value out of poor people are the people that can squeeze them for the most money. Or for the most federal aid, in the case of for-profit colleges.

So I mean basically, I left finance to try to feel better about myself and then I ended up saying, “Oh my God, I'm, I’m part of the problem yet again.”

Right and part of what's interesting about your book is that it looks at the kind of impact that data exerts, that it works on our lives at almost all the critical levels at sort of the big life-changing moments. Can you give us some examples of when it ends up shaping, limiting what we can do?

Yeah, so once I started realizing I was yet again in the soup, I really started looking around… One of my best friends was a high school principal. Her teachers were getting scored by this very opaque high stakes scoring system called the Value Added Model for teachers. I kept on urging her to send me the details. How did they get scored? She was like, “Oh I keep on asking… But everyone keeps on telling me I wouldn't understand it 'cause it’s only math.”

And this is exactly the most offensive thing for me to hear, right? Because here I am trying to think of mathematics as, you know, trying to be a sort of Ambassador of Mathematics, like mathematics is here to clarify. But instead it's literally being used as a weapon.

And it’s just beating the heads of these teachers who are just getting these scores. They’re not getting advice like here's how you can improve your score, nothing.

It’s just like here's your score and this is your final say on whether you're a good teacher or not. I was like, This is quite frankly not mathematical. And I looked into it.

But it's opaque right? Which is also what a lot of these things have in common.

It’s opaque, and it’s unaccountable. You cannot appeal it because it is opaque. Not only is it opaque, but I actually filed a Freedom of Information Act request to get the source code. And I was told I couldn't get the source code and not only that, but I was told the reason why was that New York City had signed a contract with this place called VARC in Madison, Wisconsin, which was an agreement that they wouldn't get access to the source code either. The Department of Education, the city of New York: nobody in the city, in other words, could truly explain the scores of the teachers.

It was like an alien had come down to earth and said, “Here are some scores, we’re not gonna explain them to you, but you should trust them. And by the way you can't appeal them and you will not be given explanations for how to get better.”

Yeah, it almost sounds like a primitive kind of religion: You know, the gods have decided this is the way it's going to go — mortals can only worship these divine verdicts.

Absolutely and it’s a really good way of saying it. It’s like you're being put into a cult, but you don't actually believe in it.

So once I found out it was being used for teachers, I was asking, Where else is this being used…

And I looked around, and it goes by many, many names. But it is everywhere, so we have it in lending, we have it in insurance, we have it in job-seeking. For minimum wage jobs, especially, personality tests. But for white-collar jobs you have more and more resume algorithms that sort resumes before any human eyes actually see them.

You have algorithms that are the consequences of surveillance; they take surveillance at work mostly. Especially for people like truckers who used to have a lot of independence, but now are completely surveilled. And then they use all their data to create algorithms.

It is not going to get better just with better data… Because these things are being done in situations where people do not have leverage, they don't have the ability to say “no,” like you can theoretically opt out of something online.

You cannot opt out of answering questions when you're getting a job.

These models decide what people to police or who to put into jail longer. It's additive: A person is going to be measured up by these algorithms, in multiple ways over their lifetime and at multiple moments. The winners are going to win and the losers are going to lose and the winners are not even going to see the path of the loser. It has an air of inevitability to it. Because we don't see it. We don't see it happening. It's not like a public declaration, here’s how we figured this out and here's who won and here’s who lost. It’s often secret.

It's subtle. It happens at different times for different people, so in some ways I realized that the failure of this system was a very different kind of failure than what we had seen in the financial crisis.

When the financial crisis happened, everyone noticed. People panicked, it was very loud. This, this is the opposite. This is like a quiet, individual-level sort of degradation of civil society.

And because of that there's been no public debate about it. A lot of people don't even know it's happening; they don't even have a language with which to discuss it.

Absolutely and so that's really why I wrote the book. I got to that point where I was like, “We need to know this.” Because we're not going to figure this out just by doing our thing, especially technologists.

At this point I should say I think it's going to get worse before it gets better.

Your book looks at how unjust this all is at the level of education, of voting, of finance, of housing. You conclude by saying that the data isn't going away, and computers are not going to disappear either. There are not many examples of societies that unplugged or dialed back technologically. So what are you hoping can happen? What do we need to do as a society to make this more just, and less unfair and invisible?

Great point, because we now have algorithms that can retroactively infer people’s sexual identity based on their Facebook likes from, you know, 2005. We didn't have it in 2005. So imagine the kind of data exhaust that we're generating now could likely display weird health risks. The technology might not be here now but it might be here in five years.

The very first answer is that people need to stop trusting mathematics and they need to stop trusting black box algorithms. They need to start thinking to themselves. You know: Who owns this algorithm? What is their goal and is it aligned with mine? If they’re trying to profit off of me, probably the answer is no.

And then they should be able to demand some kind of consumer, or whatever, Bill of Rights for algorithms.

And that would be: Let me see my score, let me look at the data going into that score, let me contest incorrect data. Let me contest unfair data. You shouldn’t be able to use this data against me just because — going back to the criminal justice system — just because I was born in a high crime neighborhood doesn't mean I should go to jail longer.

We have examples of rules like this . . . anti-discrimination laws, to various kinds of data privacy laws. They were written, typically, in the '70s. They need to be updated. And expanded for the age of big data.

And then, so finally I want data scientists themselves to stop hiding behind this facade of objectivity. It's just … it’s over. The game, the game is up.

There was a recent CBC documentary on Google search and it was maddening to me to hear Google engineers talking again and again about how, “There's no bias here because we're just answering questions that people are asking,” you know.

The CBC interviewer kept on saying, “Yes, but you're deciding which is first and which is second, isn't that an editorial decision?” You know, yes it is. We need to stop pretending what we're doing isn't important and influential and we need to take a kind of Hippocratic Oath of modeling where we acknowledge the power we have and our ethical responsibilities.
