When pollsters, journalists, and pundits want to signal that an opinion poll may not be right on target, they often turn to a familiar metric: the margin of error. The figure (usually rendered as something like "+/- 3 percentage points") is generally, but not entirely accurately, understood to suggest that, for example, if 29 percent of Americans say they favor chocolate ice cream over vanilla, the true number might be as low as 26 percent, or as high as 32 percent. The AP Stylebook, which sets standards widely used by U.S. media organizations, instructs journalists to include the figure in any coverage of polling results.

But some polling experts question whether margin of error is actually a useful metric. The problem, critics charge, is that few people understand what "margin of error" really means — and that, taken alone, the specific statistical error captured in the metric offers an incomplete and even misleading way to convey uncertainty. "As pollsters, all we have right now, and all we give people, is the margin of error," said Courtney Kennedy, the director of survey research at the Pew Research Center, describing it as a "fatally flawed metric."

Joshua Clinton, a political scientist at Vanderbilt University and the chair of a major polling-industry task force evaluating 2020 election poll performance, agreed. "The margin of error is such a misleading and horrible term," he said. "I wish we'd just banish it from our vernacular."

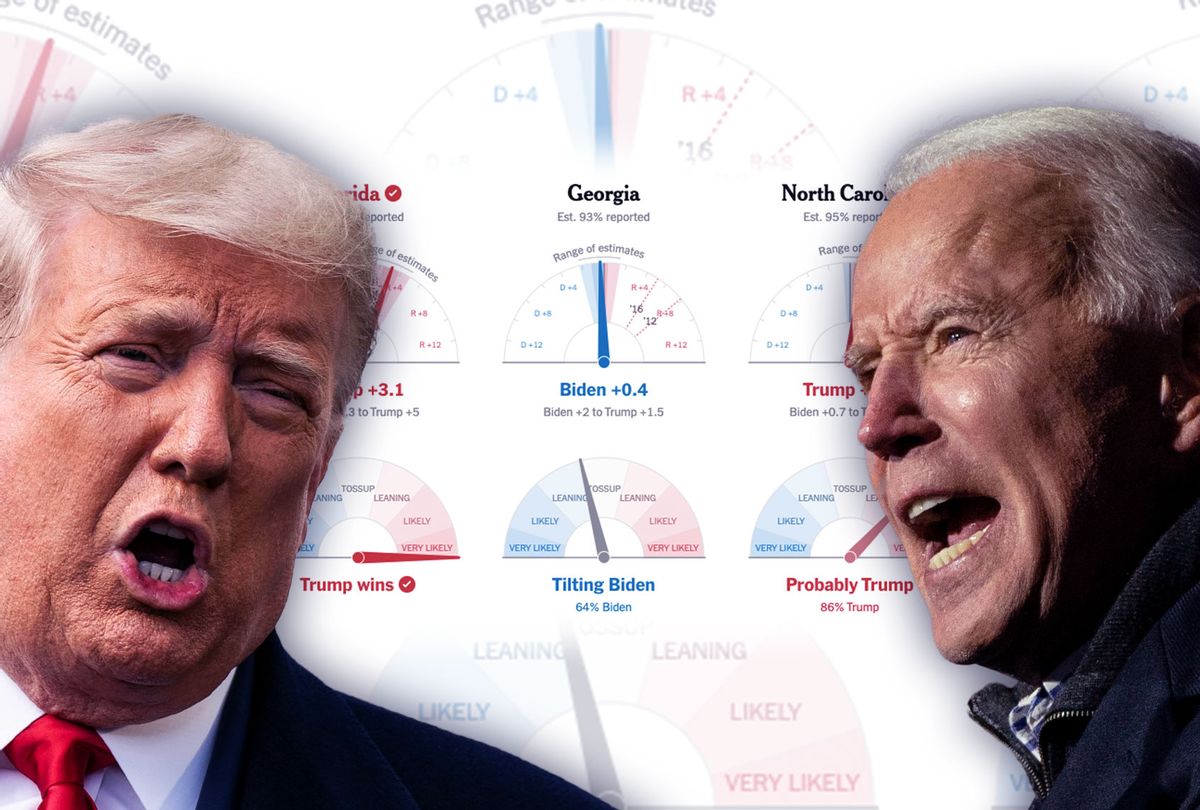

Those concerns have taken on new urgency after the 2020 election. Major polls in key swing states underestimated President Donald J. Trump's eventual share of the vote and overstated the chances for several Democratic Senate candidates. Coming after similar polling lapses in the 2016 election, the polling performance has elicited public backlash and mockery from prominent Republicans, as well as unfounded accusations of pollster fraud, including from the president.

The shortfall has led some pollsters to reevaluate their methods, even as they characterize some criticisms as unfair. But it has also led to frustration from some polling experts, who say the public often fails to grasp the intrinsic fuzziness of even the best polls and election forecasts. "I'm just honestly not sure how much more loudly or more repetitively I can scream about uncertainty and the potential for error," HuffPost polling editor Ariel Edwards-Levy wrote on Twitter a week after the election.

Margins of error may seem like a natural place to make changes — and, indeed, the 2016 election postmortem report from the American Association for Public Opinion Research (AAPOR), led by Kennedy, suggested reevaluating the way the metric is reported and explained. Experts say that replacing the margin of error, though, may be challenging. And the persistence of the metric, despite its limitations, points to the mixed incentives that pollsters face as they try to convey uncertainty while competing for attention in a crowded market.

Opinion polling depends on a simple statistical principle: the law of large numbers. If you take, say, a tub of one million blue marbles and one million red marbles and start pulling them out at random, the first 10 probably won't yield an even 50-50 split. As that sample gets larger — 100 randomly selected marbles, or 500 — the red-blue proportion will move closer to the true composition of the giant marble tub. But, even then, there will often be a little swing: 240 blue and 260 red, for example, instead of an even 250 for each side.
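To make the marble analogy concrete, here is a minimal Python sketch (an illustration only, not anything a pollster actually runs) that draws marbles at random from a simulated tub of one million blue and one million red marbles and reports the red share for growing sample sizes. The function name `draw_marbles` and the fixed random seed are assumptions made for the example. Small draws should wander noticeably from 50 percent, while large ones settle close to it.

```python
import random

def draw_marbles(n, seed=0):
    """Hypothetical helper: draw n marbles without replacement from a tub of
    1,000,000 blue and 1,000,000 red marbles; return the fraction that are red."""
    rng = random.Random(seed)           # fixed seed so reruns are repeatable
    tub_size = 2_000_000
    # Positions 0..999,999 stand for blue marbles, 1,000,000..1,999,999 for red.
    picks = rng.sample(range(tub_size), n)
    red = sum(1 for i in picks if i >= tub_size // 2)
    return red / n

# Larger samples drift less from the tub's true 50-50 composition.
for n in (10, 100, 500, 10_000):
    print(f"{n:>6} marbles: {draw_marbles(n):.1%} red")
```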

The margin of error is a way of calculating how big that swing is likely to be. The larger the sample, the smaller the error margin. If the marble sample shows that 49 percent of the marbles are red, with a margin of error of plus or minus three percentage points, that means that if someone ran the survey again many times, most of the outcomes (usually 95 percent) would fall somewhere within 3 percentage points of 49 percent.
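The textbook margin of error for a single percentage, assuming a simple random sample, is z·√(p(1−p)/n), with z = 1.96 for the usual 95 percent level. The sketch below computes it and then reruns an imaginary survey a thousand times to check that roughly 95 percent of the results land inside the margin. The helper names, the sample size of 1,067 (which happens to give about plus or minus 3 points near 50 percent), and the seed are illustrative choices, not figures from any real poll.

```python
import math
import random

def margin_of_error(p, n, z=1.96):
    """Sampling margin of error for a proportion p from a simple random
    sample of size n; z=1.96 corresponds to 95 percent confidence."""
    return z * math.sqrt(p * (1 - p) / n)

def share_within_margin(p, n, runs=1000, seed=1):
    """Hypothetical check: rerun an imaginary survey `runs` times and report
    how often the sample proportion lands within one margin of error of p."""
    rng = random.Random(seed)
    moe = margin_of_error(p, n)
    hits = 0
    for _ in range(runs):
        # Each respondent is "red" with probability p; the tub is so large
        # that draws are effectively independent.
        sample_p = sum(rng.random() < p for _ in range(n)) / n
        if abs(sample_p - p) <= moe:
            hits += 1
    return hits / runs

print(f"{margin_of_error(0.49, 1067):.3f}")  # about 0.030, i.e. +/- 3 points
print(f"{margin_of_error(0.49, 4268):.3f}")  # four times the sample, about half the margin
print(share_within_margin(0.49, 1067))       # close to 0.95
```

Because the margin shrinks with the square root of the sample size, halving it takes roughly four times as many respondents.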

Opinion polling, though, is not pulling marbles from a giant tub. Every marble summoned to represent the whole, after all, shows up. A typical opinion survey, by contrast, is a chancier affair, with phone calls sometimes going out to thousands of people in the hope that a few hundred will pick up the phone and make time to tell a total stranger, or sometimes just an automated voice, about their opinions. (Pew's survey researchers, for example, say that they reach fewer than 10 percent of the people they call.)

For election polls, the people who are willing to respond to pollsters are not necessarily representative of the people who show up to vote. Sometimes even careful interviewers can unconsciously influence or nudge answers, too, inadvertently muddying the results. It's also likely that at least some subset of people who do respond to surveys will have misunderstood the questions. Others — hopefully a small number, but almost certainly more than zero — will mislead with their answers.

Contrary to what many ordinary readers — and even many journalists — might believe, none of this additional fuzziness gets factored into the cold math of the margin-of-error calculation. "The problem is the margin of error ignores all those other error sources, and it suggests to the audience that they just don't exist," said Kennedy. "And so, when you tell people, 'It's this percentage plus or minus three,' that is just factually untrue."
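One way to see why this matters is to pretend that all of those other error sources add up to a single hidden bias (a deliberate oversimplification) and ask how often a poll would still land within its reported margin. The sketch below does that with the normal approximation. The function names and the bias values are hypothetical, and the output is not meant to reproduce any of the studies cited in this article.

```python
import math

def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def coverage(bias, n, p=0.5, z=1.96):
    """Probability that a poll lands within its reported margin of error of
    the true value when it also carries a fixed systematic bias (a stand-in,
    by assumption, for nonresponse and other non-sampling error)."""
    se = math.sqrt(p * (1 - p) / n)   # sampling standard error only
    moe = z * se                      # the margin the poll would report
    # The estimate is roughly Normal(true + bias, se); compute how often it
    # falls inside [true - moe, true + moe].
    return normal_cdf((moe - bias) / se) - normal_cdf((-moe - bias) / se)

for bias in (0.0, 0.01, 0.02, 0.03):
    print(f"hidden bias {bias:.0%}: coverage {coverage(bias, 1067):.0%}")
```

Even a 2-point bias that no one measures pulls the coverage well below the advertised 95 percent, although the reported margin itself never changes.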

That nuance does not necessarily come across in coverage of polls. Even poll-savvy people sometimes misrepresent the margin: After the 2016 election, one political scientist wrote an article about polling lapses for The Conversation, a portal for writing by academics, explaining that "the margin of error simply means how accurate the poll is."

Speaking in general about the margin of error, Clinton said the opposite: "It's not a statement of how accurate the poll is," he said.

Indeed, final election results often fall well outside polls' margins of error. Before the 2016 election, a group of researchers analyzed more than 4,000 election polls. Their results, eventually published in the Journal of the American Statistical Association, showed that the "average survey error" for those polls was "about twice as large as that implied by most reported margins of error."

"I tell my students, every time you see a margin of error, at least double it," said Clinton.

More recently, Don Moore, a professor at the Haas School of Business at the University of California, Berkeley, and a student analyzed 1,400 polls from presidential primaries and general elections. He said their analysis, which has not been peer reviewed, found that "a week before the election, a poll's margin of error includes the actual election result about 60 percent of the time."

These findings are not breaking news to pollsters. For example, in a 1988 report, a veteran pollster, Irving Crespi, analyzed 430 election polls from the 1980s. On average, the polls were nearly 6 percentage points off — an error, Crespi wrote, that was "roughly three to four times as large as what would be expected through chance alone."

Harris Insights and Analytics, a large market and political research firm that conducts the Harris Poll, has long avoided using the term "margin of error" in its surveys, expressly because it is misunderstood by the public and fails to capture the myriad potential sources of error in any analysis. Margins of error arguably "confuse more people than they enlighten," the firm declared in a 2007 press release, "and they suggest a level of accuracy that no statistician could justify."

* * *

It's not clear what an alternative metric to margin of error would be. "Quantifying all this other error is not that simple," said Brian Schaffner, a political scientist at Tufts University and academic survey researcher. "It's not easy to put into a single number," he continued, "and journalists are probably not going to want to spend two paragraphs of a story about a poll talking about all the different measures of uncertainty that we should be thinking about in this poll."

One alternative, Schaffner suggested, would be for the industry to adopt the rule of thumb used by Clinton and others, and just consistently report a doubled margin of error. But, he cautioned, that's just "a shorthand rule," and not a precise measure. Another approach, some experts say, could be to use the historical performance of polls to produce metrics that estimate how likely a poll is to miss the mark.
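Both ideas are simple to express in code. The sketch below is a hypothetical illustration, not a method any polling organization is known to use: `doubled_margin` applies the rule of thumb, and `empirical_margin` takes the sizes of past polls' misses and returns the smallest error that covered 95 percent of them (a nearest-rank percentile). The list of past errors is made up purely for demonstration.

```python
def doubled_margin(reported_moe):
    """The rule of thumb: simply double the reported margin of error."""
    return 2 * reported_moe

def empirical_margin(past_errors, level_pct=95):
    """Hypothetical 'historical' margin: the smallest absolute miss that
    covers level_pct percent of past polls (nearest-rank percentile)."""
    ranked = sorted(abs(e) for e in past_errors)
    rank = -(-level_pct * len(ranked) // 100)   # integer ceiling, 1-based rank
    return ranked[rank - 1]

# Made-up final-poll misses, in percentage points (poll minus result).
past_errors = [1.2, -3.5, 4.8, -0.6, 2.9, -6.1, 0.4, 3.3, -2.2, 5.7,
               -1.8, 2.5, -4.4, 0.9, 7.0, -3.0, 1.6, -5.2, 2.0, -0.3]

print(doubled_margin(3.0))            # 6.0
print(empirical_margin(past_errors))  # 6.1
```

A real historical metric would need far more polls, and would have to decide which past elections are comparable; the sketch only shows the shape of the calculation.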

Implementing such changes, though, could face several obstacles. For one thing, there's no single body that controls how polls are reported. While AAPOR is influential, it only issues recommendations, and not all polling firms or media outlets follow them.

Pollsters may also have incentives to overstate the precision of their results. "If everyone else is saying their polls are really precise, do you want to be the first one who comes out and says, 'And here's my poll result, and it's plus or minus 30'?" said Clinton. "If everybody else is using margin of error, then you're basically saying that your poll is not as good as the other polls, in the eyes of people who may not fully appreciate all that."

Charles Franklin, a political scientist who runs the prominent Marquette Law School Poll, has experienced that dynamic firsthand. Franklin began running the Marquette poll in 2012, after more than two decades doing academic survey research. He said he thought his background in statistics and academia would give him "the ability to convey more about margin of error and uncertainty than I thought public polling typically did." As part of that effort, he decided to be more transparent about a little-appreciated quirk in margin of error calculations.

That quirk works something like this: Take an imaginary poll that suggests that Joe Biden is leading Donald Trump by 5 percentage points: Biden is favored by 50 percent of the sample, and Trump by 45 percent. If the poll has a margin of error of plus or minus 3 percentage points, that represents a 3-point margin around Biden's total, and a 3-point margin around Trump's total. But the 5 percentage-point gap between the two has its own margin of error — and that margin will be much larger than 3 points. That's because it captures the potential of oversampling one candidate while undersampling the other. As Pew explains it: "If the Republican share is too high by chance, it follows that the Democratic share is likely too low, and vice versa." As a result, the margin of error for the candidate's lead is roughly double the reported margin of error of the poll – in this example, 6 percentage points, rather than 3.
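The arithmetic behind that quirk can be sketched with the standard formula for the difference between two shares drawn from the same simple random sample. Because the two shares move in opposite directions, their covariance is −p₁p₂/n, which inflates the variance of the gap. The function names and the sample size of 1,067 are illustrative assumptions; weighted polls would need further adjustments that this sketch ignores.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Margin of error for one candidate's share p (simple random sample)."""
    return z * math.sqrt(p * (1 - p) / n)

def lead_margin_of_error(p1, p2, n, z=1.96):
    """Margin of error for the lead p1 - p2 when both shares come from the
    same n respondents. The covariance -p1*p2/n adds 2*p1*p2/n to the
    variance, giving (p1 + p2 - (p1 - p2)**2) / n."""
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)

n = 1067                                              # about +/- 3 points at 50 percent
print(f"{margin_of_error(0.50, n):.3f}")              # about 0.030
print(f"{lead_margin_of_error(0.50, 0.45, n):.3f}")   # about 0.058, nearly double
```

With Biden at 50 percent and Trump at 45 percent, the lead's margin comes out just under 6 points, close to the "roughly double" figure Pew describes.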

Put simply, if a journalist describes the poll above as having "Biden ahead by five points," the lead might be another six points in either direction, just from chance variation – and that's before accounting for other sources of error that can affect survey results.

This is "survey statistics 101," said Franklin. "So I naively wrote that up in my very first press release," he recalled, "only to have The Washington Post characterize our results as having 'an unusually large margin of error.'" The poll looked bad, and Franklin felt burned.

"I quickly came to the conclusion that I could only complicate and confuse things by insisting in our press releases in reporting that correct margin of error for a difference between two percentages," Franklin said. "And I kind of despair about that, because you want to be technically correct."

* * *

Communicating uncertainty, of course, is something that has long dogged not just pollsters, but scientists writ large. Many climate scientists, epidemiologists, and meteorologists are tasked with juggling uncertain variables in order to understand and, as best they can, predict how complex systems will behave given certain inputs. Instead of clear-cut answers, those models yield ranges of outcomes, often rendered as probabilities. In the case of climate models and epidemiological models, those results, despite the uncertainty, can have serious political implications.

The reading public has, for the most part, learned to accept probabilities, and the underlying uncertainties, when it comes to, say, the weather. Few people are surprised or upset when a 25 percent chance of rain ends up delivering showers. But on other fronts — particularly those freighted with political baggage — many people respond to uncertainty with confusion, disappointment, and even anger.

In a conversation the week after the election, Franklin said he was feeling vexed over how to communicate uncertainty. He acknowledged that margin of error is an imperfect metric, and he's interested in alternatives. But, he said, margin of error is a more straightforward, transparent calculation than whatever metric would likely replace it. And, Franklin said, an excess of precision can actually detract from the work of pollsters. "We've got to remember that the purpose of public polling of this sort is to facilitate a public conversation about public affairs and elections," he said. A public-facing poll, he added, is not like a scientific paper, trying "to produce the most precise estimate and statements of confidence and hypothesis test."

While that argument may not convince all polling critics, some analysts also caution that even metrics that reflect uncertainty don't necessarily help people evaluate polls more soberly. "The problem that I've seen in a ton of my research is just that people are very good at suppressing uncertainty, even when you give them something like a probability," said Jessica Hullman, a professor of computer science and journalism at Northwestern University who studies representations of data, including in election forecasting.

The basic problem, she said, goes beyond any one metric — not least because many people approach polls with high degrees of motivated thinking. It's "the fact that people are so hard-wired to want answers when they're looking to polls, when they're looking to election forecasts," Hullman said. Many people, she added, "are consulting them because they want some information to make them feel less anxious about the future."

Hullman, though, does think statistical literacy can improve, albeit slowly. She praises people like Nate Silver, the prominent election forecaster, who essentially combine their election forecasts with miniature lessons in statistics, and who focus their analysis on the inherent uncertainty of polling. Over the past decade, she said, she has seen many people become more sophisticated at interpreting polls and forecasting models.

"I do think readers can get used to things," she said. "And I think uncertainty is something that, as a society, we are slowly getting better at."

This article was originally published on Undark.
