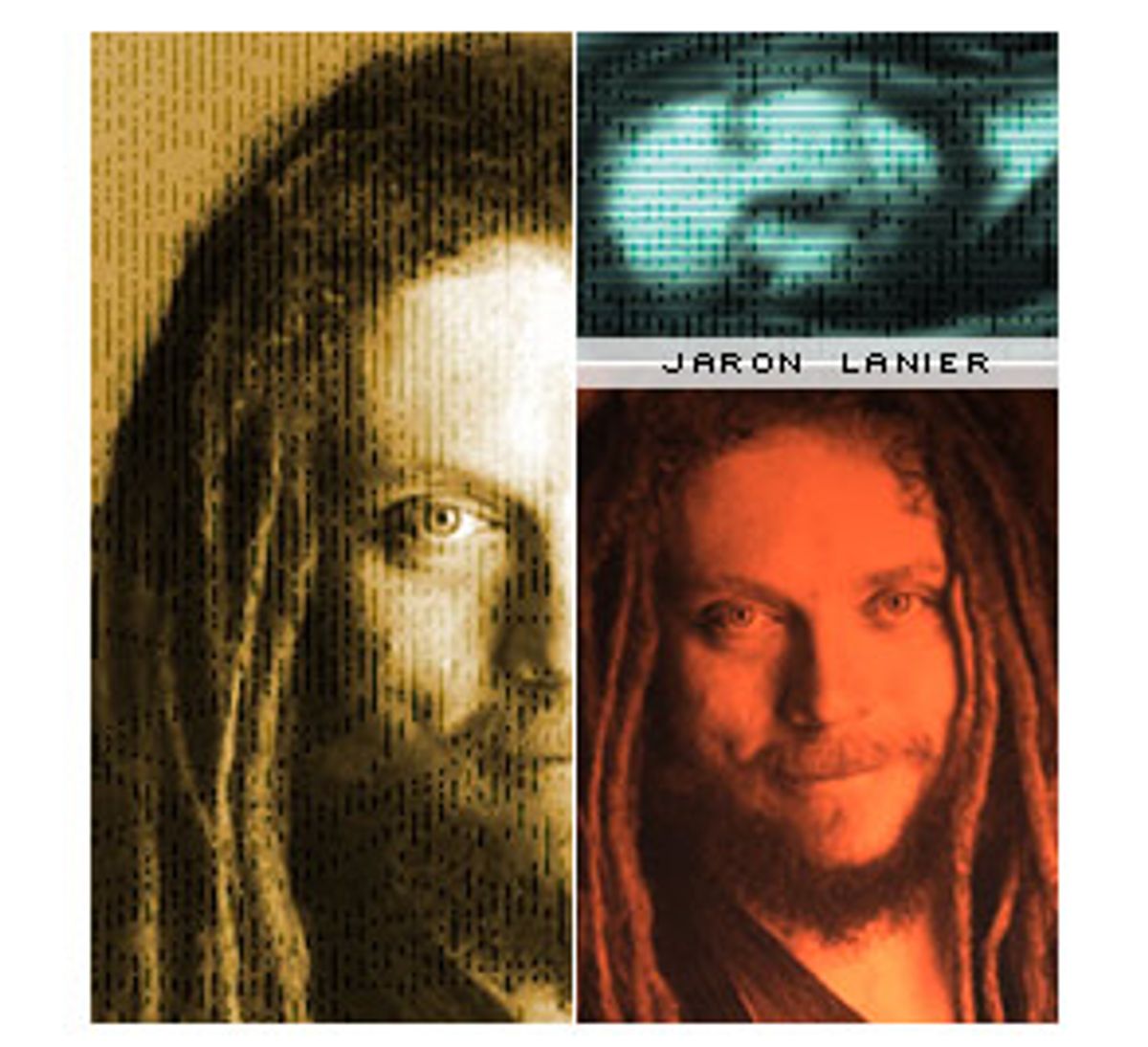

Jaron Lanier entered college at age 15. Before turning 25, he coined the term "virtual reality," landed on the cover of Scientific American and subsequently became a hot pop-culture commodity -- a dreadlocked, blue-eyed visionary, media darling and inspiration to geeks everywhere.

But now, in his 39th year, Lanier has turned sour on his own futuristic visions. Lanier recently published "One-half a Manifesto" at Edge.org, an online intellectual forum. The 9,000-word treatise rebukes the "resplendent dogma" of today's au courant visionaries: the irrational belief "that biology and physics will merge with computer science." The essay also takes techno-titans such as the roboticist Hans Moravec to task for working to create thinking, self-replicating machines while ignoring the fact that such research will only "cause suffering for millions of people."

Lanier's turnaround is impressive. He is, after all, a man who spent much of the last 20 years telling us that the real world would merge with the virtual, creating new forms of community that would enhance the quality of our lives. The one-time evangelist has suddenly become a skeptic.

"My world has gone nuts for liking computers too much and not seeing them clearly for what they are," he says in an interview with Salon about the essay.

What they are, Lanier argues, is far from the omnipotent engines of destruction envisioned by other scientists-turned-cautionaries such as Sun Microsystems' Bill Joy. Nor are they saviors, declares Lanier. Neither the evil nanobots of Joy's nightmare, nor the poverty-curing "mind children" that Moravec envisions are possible, says Lanier. Simply put, software just won't allow it. Code can't keep up with processing power now, and it never will.

"Software is brittle," he says. "If every little thing isn't perfect, it breaks. We have to have an honest appreciation for how little progress we've made in this area."

On the surface, Lanier's stance appears to resemble that of Joy, the influential programmer who used the pages of Wired magazine to condemn Moravec and others for desiring to build sentient machines without acknowledging the apocalyptic dangers. But Lanier ultimately takes quite a different tack. Joy condemned science for what it could do; Lanier condemns it for failing to recognize what it can't. Lanier's upstart argument yields a uniquely here-and-now version of computer science ethics and an entirely different, but equally frightening form of what Lanier calls "Bill's version ... of the Terror."

Lanier didn't always play the skeptic. He was once a believer -- convinced that it was possible to create perfect computers and cure software of its tendency to break rather than bend.

"During my 20s, I definitely believed I could crack this problem," he says. "I spent a lot of time on trying to make something that didn't have the unwieldiness of software, the brittleness of software."

He failed. The artificial intelligence pioneer Marvin Minsky mentored Lanier, but the relationship never led to a breakthrough. Even Lanier's brainchild -- virtual reality programming language, or VPL -- often crashed. VPL may have been the heart and soul of virtual reality, the basis for gloves, masks, games and more, but it still broke down. It was great code, but it was just code. It wasn't perfect.

"I ultimately didn't pull it off," Lanier says.

The failure didn't crush Lanier: It just made him wiser. He started to see that Moore's Law (the observation that processing power doubles roughly every 18 months) is not enough; that processing power without perfect software cannot create AI.

Still, he didn't speak up. "All ambitious computer scientists in their 20s think they are going to crack it," he says, relishing their exuberance with a raised voice. "And why shouldn't they?"

So he watched with simple amusement as his contemporaries continued blindly to believe what Lanier knew to be false; he watched as these futurists -- or "cybernetic totalists" as he calls them now -- focused on extremes, on experiments in academic labs or on books of boosterism like Eric Drexler's "Unbounding the Future: The Nanotechnology Revolution."

He remained silent. Even when Thomson-CSF, a French electronics company, stripped him of his virtual reality patents and ousted him (with good reason, some contend) from the company in 1992, Lanier did not make a fuss. He just went back to work on the electronic music he loved and on other forms of computer science, figuring his contemporaries would eventually learn their lesson too. Their dreams were harmless, he thought.

Besides, their wild visions "didn't matter then because there was only this obscure group of people who believed it," he says.

Then the Internet exploded. Suddenly the technologists Lanier had been indulgently dismissing -- his "quirky, weird friends with strange beliefs" -- became Zeitgeist heroes. Those wacky ideas suddenly exited the confines of Stanford and MIT and became seeds for attracting venture capital.

Lanier first noticed the dangers inherent in the mass marketing of half-baked artificial intelligence concepts while using Microsoft Word. All he wanted to do was abbreviate a name he had cooked up -- "tele-immersion." "It's a cross between virtual reality and a transporter booth," he says, a strategy for employing the Internet to bring people together in a computer-generated, 3-D space.

Lanier simply wanted to write the word "tele-immersion" as "tele-i." And when Word wouldn't let him do it, capitalizing the "i" repeatedly, Lanier found himself frustrated, even angry. Though he knows how to turn it off, he claims most users don't. And he's still none too pleased.

"This crazy artificial intelligence philosophy which I used to think of as a quirky eccentricity has taken over the way people can use English," he says. "We've lost something."

And it's not just Word that spun Lanier into a tizzy. It's the sheer prevalence of these "thinking" features: the fact that PowerPoint shrinks the font when you add too many words, that browsers add complete URLs after the user puts in three letters, and that there's little that most people can do about it.

"[Programmers] are sacrificing the user in order to have this fantasy that the computers are turning into creatures," he says. "These features found their way in not because developers think people want them, but because this idea of making autonomous computers has gotten into their heads."

Not only do people not want them, suggests Lanier, but they won't work anyway. In the end, Joy may be saved by software's failures. Nanobots will not make humans "an endangered species," as the headline to Joy's article states; the White Plague -- a cloud of nanobot waste called "gray goo" in Eric Drexler's book, "Engines of Creation" -- will not descend on our world and kill us all, as Joy fears.

But that's not to say the future will be all hunky-dory. Lanier predicts a whole different set of dangers. We won't be annihilated, we'll just be further and further divided. It will be a Marxist class warfare nightmare, a more extreme version of what Lanier considers two of the present time's dominant characteristics: wild technological innovation and a widening gap between rich and poor.

Biotechnological advances, combined with the increases in processing power predicted by Moore's Law, will result in the mixing of genetics, super-fast processors and various levels of flawed software. The rich will get good code and immortality, suggests Lanier. The middle class will gain a few extra years and the poor will simply remain horribly mortal.

"If Moore's Law or something like it is running the show, the scale of separation could become astonishing," he writes near the end of his essay. "This is where my Terror resides, in considering the ultimate outcome of the increasing divide between the ultra-rich and the merely better off."

"With the technologies that exist today, the wealthy and the rest aren't all that different; both bleed when pricked," writes Lanier. "But with the technology of the next 20 or 30 years they might become quite different indeed. Will the ultra-rich and the rest even be recognizable as the same species by the middle of the new century?"

Lanier's pessimism might seem to contradict his earlier argument that software's inherent flaws will come to humanity's rescue. But Lanier says there is no contradiction: He's simply taking one likely scenario to its final ending point, an end that acknowledges the weakness of software.

So should we just stop researching controversial technologies, as Joy suggests? Lanier's critics say no. George Dyson, author of "Darwin Among the Machines," responded to Lanier's essay on Edge.org by noting that the glitchy nature of software is just the "primordial soup" of technology "that proved so successful in molecular biology." Instead of condemning the mess, he writes, "let us praise sloppy instructions, as we also praise the Lord."

Rodney Brooks, director of the MIT Artificial Intelligence Laboratory, suggests that Lanier abandon the notion "that we are different from machines in some fundamental, ineffable way" and accept the fact that all matter is essentially mechanical. And Kevin Kelly -- a founding editor of Wired magazine -- wrote in his own response on the Edge Web site that, flawed or not, "right now almost anything we examine will yield up new insights by imagining it as computer code."

Even Lanier admits that the doom-and-gloom scenario in his essay's conclusion may not occur. "I'm an optimist," he says. "I believe we can avoid it." Indeed, the manifesto is only "one-half" because the other half of Lanier would have to include all the positive aspects of science -- the "lovely global flowering of computer culture," he writes -- that can be seen all over the Web. It's found in the community of Napster, he says, just as it's visible in the open-source software movement. And it can be seen in his own work on tele-immersion, which he says "will be bigger than the telephone" -- in about 10 to 20 years.

It's the notion that the computer is inherently superior to humans that Lanier wants to combat. He's lost his faith in computers and, like a present-day Nietzsche, he'd like the rest of the world to recognize that this 21st century God is dead.

"I'd like to address the intermediate people, the ones who are really designing the software that people will use," he says. "And I would say please repudiate this notion that the computer could have an opinion and can be your peer. Instead, put the person in charge ... [B]ecause if the tools people use to express themselves and do their work are written by people who have a certain ideology, then it is going to bleed through to everyone. When you have a generation who believes that a computer is an independent entity that's on its way to becoming smarter and smarter, then your design aesthetic shifts so that you further its progress toward that goal. That's a very different design criteria than just making something that's best for the people."

"There should be a sense of serving the user explicitly stated," he says, his high-pitched voice rising. "There should be a Hippocratic oath. The reason the Hippocratic oath exists is to place the priority on helping individual people rather than medical science in the abstract. As a physician it would be wrong to choose furthering your agenda of future medicine at the expense of a patient. And yet computer science thinks it's perfectly fine to further its agenda of trying to make computers autonomous, at the expense of everyday users."

"It's immoral," he adds. "I want to see humanistic computer science. You have to be human-centric to be moral."
