Facebook does such a horrific job of figuring out what kinds of ads to show me that I suppose we should all be delighted to learn that the company has formed an artificial intelligence task group to algorithmically derive meaning from our newsfeed posts. The goal, according to Technology Review, is to "perhaps boost the company’s ad targeting."

My initial temptation is to tell Facebook to go for it! Knock it out of the park! I mean, seriously, of the five sponsored advertisements on the right column of my Facebook home page this morning, one offered to help "improve reading and comprehension skills," another assumed I was "remarried with children?" (I'm not) and suggested counseling by telephone, and a third pushed an MBA program at St. Mary's that would teach me to "learn how to make holistic & strategic decisions focused on corporate sustainability." Facebook, your reading and comprehension skills need help. Maybe Skynet can offer some assistance.

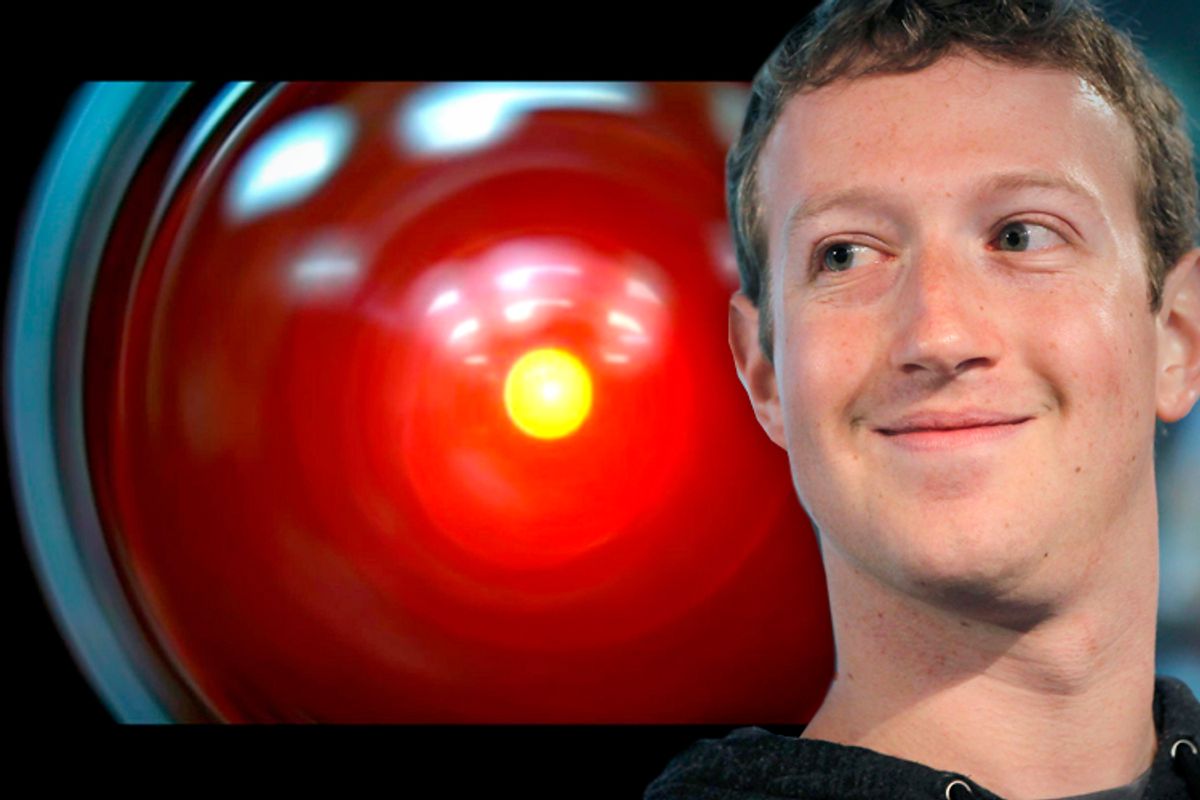

I would (grudgingly) concede that the "dating for cyclists" ad was properly targeted if it were not for the fact that for years I have been ruthlessly marking every dating ad Facebook shows me as "offensive" in a clearly hopeless campaign to teach Mark Zuckerberg that I am not looking for romance from a Facebook advertisement. Artificial intelligence? Please!

The notion that the cutting edge of artificial intelligence is being propelled, at least in part, by a corporate desire to improve the efficacy of advertising tells us probably as much as we need to know about how late capitalism and technological innovation mutually reinforce each other in the 21st century. "Deep learning," as described by Technology Review, "uses simulated networks of brain cells to process data." That sounds very cool, until you realize that the goal is to help advertisers move more units.

A parallel objective appears to be to show Facebook users only the newsfeed posts that they really want to see.

> Facebook’s chief technology officer, Mike Schroepfer, [says] that one obvious place to use deep learning is to improve the news feed, the personalized list of recent updates he calls Facebook’s “killer app.” The company already uses conventional machine learning techniques to prune the 1,500 updates that average Facebook users could possibly see down to 30 to 60 that are judged to be most likely to be important to them. Schroepfer says Facebook needs to get better at picking the best updates due to the growing volume of data its users generate and changes in how people use the social network.

Great. So the same techniques that result in offers to help me with my reading comprehension are also currently responsible for picking and choosing which newsfeed updates I see. That's not encouraging. But I suppose that if I am to adhere to the same principle that says better-targeted ads will be more acceptable, I should concede that more "interesting" updates via savvy AI would also be acceptable.

Except I don't. I don't want Facebook to arrogate to itself the right to decide what newsfeed updates to show me. I choose whom to follow or friend of my own free will. If I get bored, I reserve the right to unfollow or unfriend or downgrade upon my own fickle whim. I don't want a simulated network of faux-Andrew Leonard brain cells making their own decisions as to what will please me. Where's the serendipity in that? Where's my autonomy?

There's a fundamental problem at the heart of the notion that "deep learning" derived from crunching a ton of big data and processing it with neural networks will figure out what we really like and really don't like. The problem is the ultimate consequence of heading down that road: permanent residence in an information environment that is nothing more than a perfectly constructed echo chamber in which we only hear what we want to hear and only see what we want to see. A world in which we are essentially living in our own heads, without fear of contradiction or irritation.

It will be the worst kind of nanny state, the one in which we are smothered by our own reflection. And Facebook is building it.
