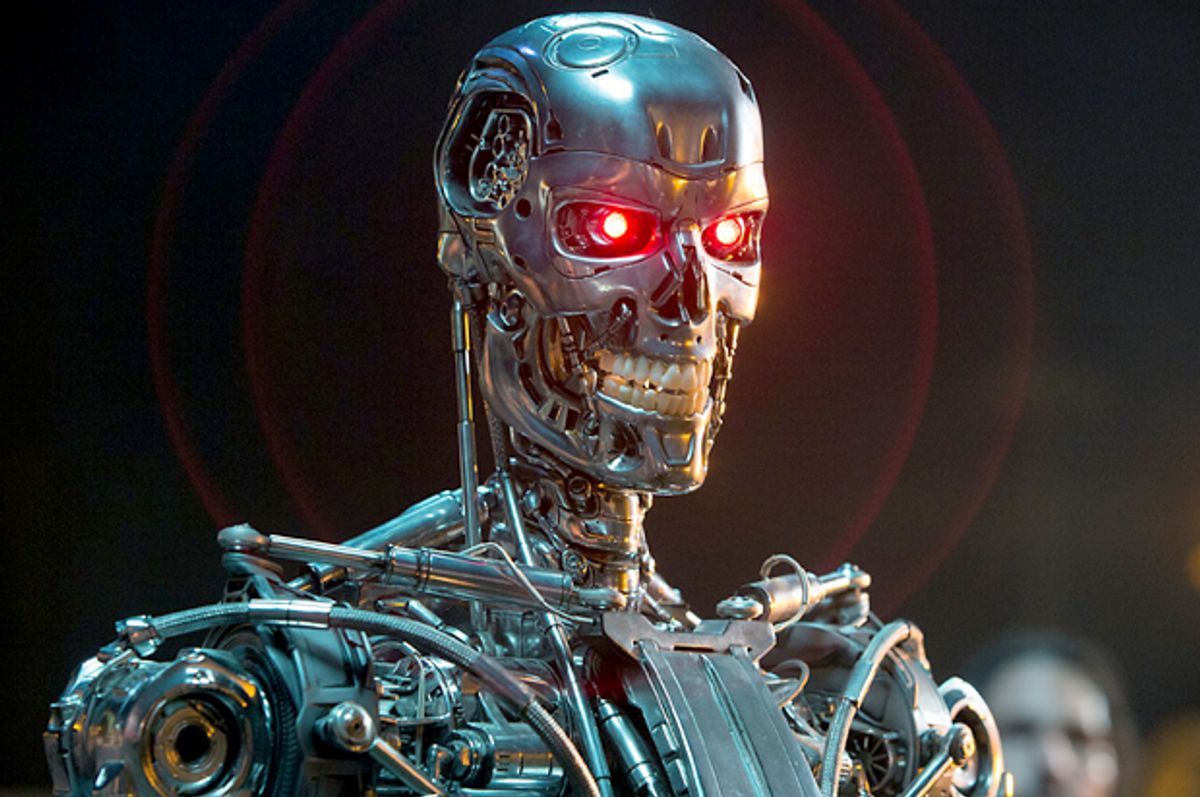

Guillaume Lample and Devendra Singh Chaplot, two researchers at Carnegie Mellon, recently announced that they had created an artificial intelligence capable of killing human players in "Doom" deathmatch scenarios, a situation of the very sort Isaac Asimov's Laws of Robotics were intended to prevent.

The First Law is that "a robot may not injure a human being, or through inaction, allow a human being to come to harm," a law that Lample and Chaplot's research clearly violates. According to their paper, their model "is trained to simultaneously learn these features along with minimizing a Q-learning objective, which is shown to dramatically improve the training speed and performance of our agent. Our architecture is also modularized to allow different models to be independently trained for different phases of the game. We show that the proposed architecture substantially outperforms built-in AI agents of the game as well as humans in deathmatch scenarios."

At the moment, the AI can only kill humans on the version of Mars within "Doom," but as Dave Gershgorn noted on Twitter:

> AI researchers spend so much time convincing people that their AI won't kill us, then research mowing people down in a game called "Doom"
>
> Dave Gershgorn (@davegershgorn), September 21, 2016

The danger here isn't that an AI will kill random characters in a 23-year-old first-person shooter, but that, because it is designed to navigate the world as humans do, it could easily be ported elsewhere. Given that it was trained via deep reinforcement learning, which rewarded it for killing more opponents, the fear is that if it were ported into the real world, it wouldn't be satisfied with a single kill, and its appetite for death would only increase as time went on.
