As a psychologist researching misinformation, I focus on reducing its influence. Essentially, my goal is to put myself out of a job.

Recent developments indicate that I haven’t been doing a very good job of it. Misinformation, fake news and “alternative facts” are more prominent than ever. Oxford Dictionaries named “post-truth” its 2016 word of the year. Science and scientific evidence have been under assault.

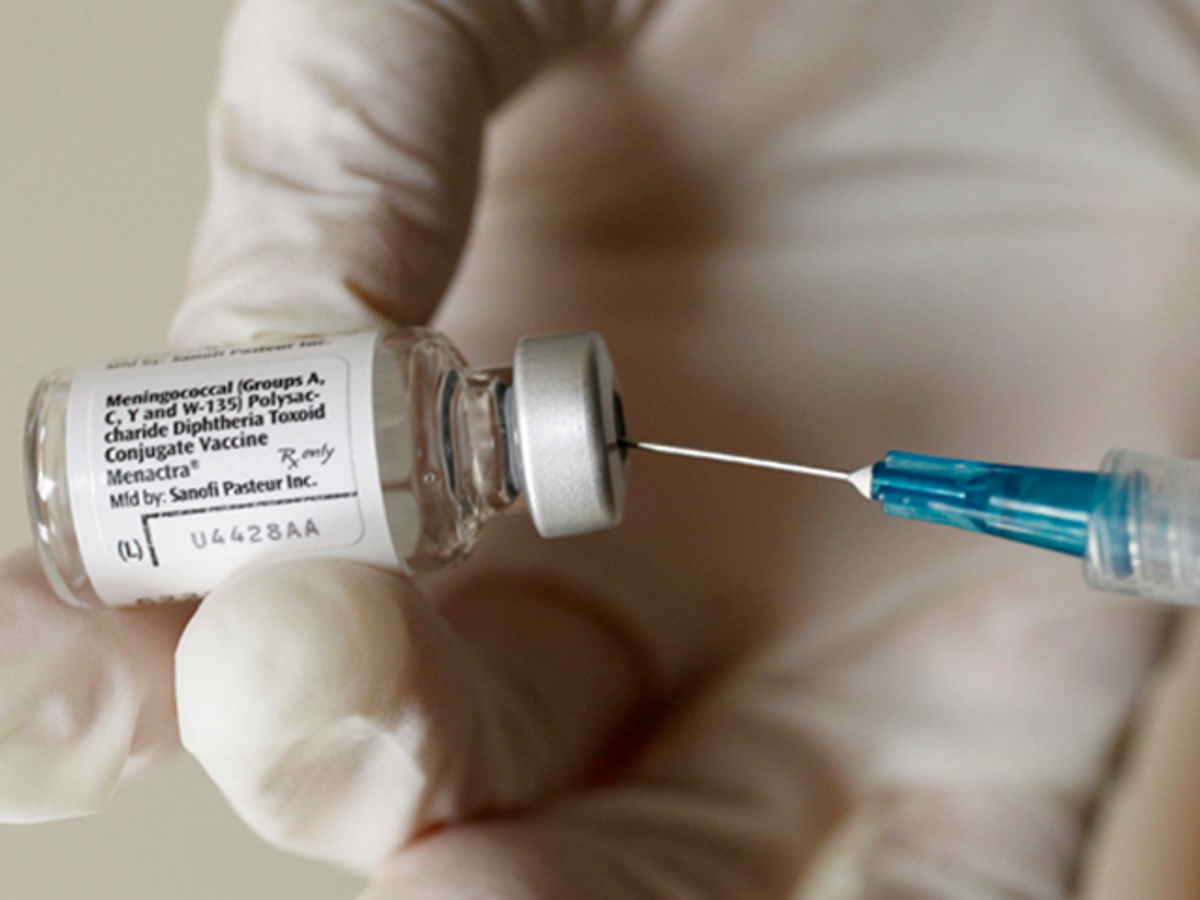

Fortunately, science does have a means to protect itself, and it comes from a branch of psychological research known as inoculation theory. This borrows from the logic of vaccines: A little bit of something bad helps you resist a full-blown case. In my newly published research, I’ve tried exposing people to a weak form of misinformation in order to inoculate them against the real thing — with promising results.

Two ways misinformation damages

Misinformation is being generated and disseminated at prolific rates. A recent study comparing arguments against climate science with policy arguments against action on climate found that science denial is increasing relative to policy arguments. And recent research indicates these efforts have an impact on people’s perceptions and science literacy.

A recent study led by psychology researcher Sander van der Linden found that misinformation about climate change has a significant impact on public perceptions about climate change.

The misinformation they used in their experiment was the most shared climate article in 2016. It’s a petition, known as the Global Warming Petition Project, featuring 31,000 people with a bachelor of science or higher, who signed a statement saying humans aren’t disrupting climate. This single article lowered readers’ perception of scientific consensus. The extent to which people accept there’s a scientific consensus about climate change is what researchers refer to as a “gateway belief,” influencing attitudes about climate change such as support for climate action.

At the same time that van der Linden was conducting his experiment in the U.S., I was on the other side of the planet in Australia conducting my own research into the impact of misinformation. By coincidence, I used the same myth, taking verbatim text from the Global Warming Petition Project. After showing the misinformation, I asked people to estimate the scientific consensus on human-caused global warming, in order to measure any effect.

I found similar results, with misinformation reducing people’s perception of the scientific consensus. Moreover, the misinformation affected some more than others. The more politically conservative a person was, the greater the influence of the misinformation.

This gels with other research finding that people interpret messages, whether they be information or misinformation, according to their preexisting beliefs. When we see something we like, we’re more likely to think that it’s true and strengthen our beliefs accordingly. Conversely, when we encounter information that conflicts with our beliefs, we’re more likely to discredit the source.

However, there is more to this story. Beyond misinforming people, misinformation has a more insidious and dangerous influence. In the van der Linden study, when people were presented with both the facts and misinformation about climate change, there was no net change in belief. The two conflicting pieces of information canceled each other out.

Fact and “alternative fact” are like matter and antimatter. When they collide, there’s a burst of heat followed by nothing. This reveals the subtle way that misinformation does damage. It doesn’t just misinform. It stops people believing in facts. Or as Garry Kasparov eloquently puts it, misinformation “annihilates truth.”

Science’s answer to science denial

The assault on science is formidable and, as this research indicates, can be all too effective. Fittingly, science holds the answer to science denial.

Inoculation theory takes the concept of vaccination, where we are exposed to a weak form of a virus in order to build immunity to the real virus, and applies it to knowledge. Half a century of research has found that when we are exposed to a “weak form of misinformation,” this helps us build resistance so that we are not influenced by actual misinformation.

An inoculating text requires two elements. First, it includes an explicit warning about the danger of being misled by misinformation. Second, it provides counterarguments explaining the flaws in that misinformation.

In van der Linden’s inoculation, he pointed out that many of the signatories were fake (for instance, a Spice Girl was falsely listed as a signatory), that 31,000 represents a tiny fraction (less than 0.3 percent) of all U.S. science graduates since 1970 and that less than 1 percent of the signatories had expertise in climate science.
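As a rough check on the scale involved, the “less than 0.3 percent” figure can be taken at face value and turned around. The total number of U.S. science graduates since 1970, written here as $N$, is not stated in this article, so the calculation below only bounds it and should be read as an illustration rather than a reported statistic:

$$
\frac{31{,}000}{N} < 0.003 \quad\Longrightarrow\quad N > \frac{31{,}000}{0.003} \approx 10.3 \text{ million}
$$

In other words, if the stated fraction holds, the petition’s 31,000 signatories come from a pool of more than 10 million science graduates.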

In my recently published research, I also tested inoculation but with a different approach. While I inoculated participants against the Petition Project, I didn’t mention it at all. Instead, I talked about the misinformation technique of using “fake experts”: people who convey the impression of expertise to the general public but have no actual relevant expertise.

I found that explaining the misinformation technique completely neutralized the misinformation’s influence, without even mentioning the misinformation specifically. For instance, after I explained how fake experts have been utilized in past misinformation campaigns, participants weren’t swayed when confronted by the fake experts of the Petition Project. Moreover, the misinformation was neutralized across the political spectrum. Whether you’re conservative or liberal, no one wants to be deceived by misleading techniques.

Putting inoculation into practice

Inoculation is a powerful and versatile form of science communication that can be used in a number of ways. My approach has been to combine the findings of inoculation research with the cognitive psychology of debunking, developing the Fact-Myth-Fallacy framework.

This strategy involves explaining the facts, followed by introducing a myth related to those facts. At this point, people are presented with two conflicting pieces of information. You reconcile the conflict by explaining the technique that the myth uses to distort the fact.

We used this approach on a large scale in a free online course about climate misinformation, Making Sense of Climate Science Denial. Each lecture adopted the Fact-Myth-Fallacy structure. We started by explaining a single climate fact, then introduced a related myth, followed by an explanation of the fallacy employed by the myth. This way, while explaining the key facts of climate change, we also inoculated students against 50 of the most common climate myths.

For example, we know we are causing global warming because we observe many patterns in climate change unique to greenhouse warming. In other words, human fingerprints are observed all over our climate. However, one myth argues that climate has changed naturally in the past before humans; therefore, what’s happening now must be natural also. This myth commits the fallacy of jumping to conclusions (or non sequitur), where the premise does not lead to the conclusion. It’s like finding a dead body with a knife poking out of its back and arguing that people have died of natural causes in the past, so this death must have been of natural causes also.

Science has, in a moment of frankness, informed us that throwing more science at people isn’t the full answer to science denial. Misinformation is a reality that we can’t afford to ignore — we can’t be in denial about science denial. Rather, we should see it as an educational opportunity. Addressing misconceptions in the classroom is one of the most powerful ways to teach science.

It turns out the key to stopping science denial is to expose people to just a little bit of science denial.

John Cook, Research Assistant Professor, Center for Climate Change Communication, George Mason University
