If financial crises came with their own nicknames, the subprime meltdown surely would have earned the sobriquet “worst financial crisis since the Great Depression.” That mantra has been chanted over and over again, not only by leading academics and media pundits, but at the highest levels of policy making. When President Barack Obama selected Christina Romer, an economic historian from the University of California, Berkeley, as chair of his Council of Economic Advisers, some carped that the president should have selected an economist with a deeper background in policy rather than in economic history. When Romer herself later asked President Obama’s chief of staff Rahm Emanuel why she got the job, Emanuel answered: “You’re an expert on the Great Depression, and we really thought we might need one.” Given how close the subprime crisis came to economic Armageddon, it is important to understand the series of policy mistakes behind it.

For the most part, previous chapters in this book have focused on particular policies that have had disastrous results, rather than on economic disasters and the policies that led to them. The three policy failures of the interwar period—German reparations after World War I, the interwar gold standard, and the increasing trade protectionism of the 1930s—each merited its own chapter instead of being discussed in a single chapter on the Great Depression. The approach adopted here is different. Rather than starting with a failed economic policy, this chapter starts with a disaster—the subprime crisis—and examines the policies that contributed to it.

There are good reasons for proceeding in this way. The interwar policies discussed earlier had their origins in different countries and occurred under widely differing circumstances. World War I reparations resulted from fears that an isolationist United States would not be able to counter future German aggression, the heavy burdens of inter-Allied debts, and a French desire to punish Germany. The worldwide return to the gold standard was galvanized by the British precedent, which was based on a desire to return to the monetary “normalcy” of the nineteenth century. And the rise of trade protectionism, spurred by the United States’s Smoot-Hawley tariff, was a response to the beginnings of a global economic downturn and domestic political factors. By contrast, the subprime crisis originated almost entirely in the United States, although it subsequently spread far and wide. The main culprits behind the crisis were ill-conceived and ideologically motivated fiscal and monetary policies, which were aided and abetted by inadequate regulation and a variety of other policy mistakes.

*

Despite the severity of both the Great Depression and the subprime crisis, neither was completely unprecedented. Banking crises were common during the nineteenth and early twentieth centuries: there were more than 60 such crises in the industrialized world during 1805–1927. Many of these crises shared a common “boom-bust” pattern. Boom-bust crises occur when business cycles—the regular, normally moderate upward and downward movements in economic activity—become exaggerated, leading to a spectacular economic expansion followed by a dramatic collapse. Boom-bust crises play a central role in formal models of financial crises dating back at least as far as Yale economist Irving Fisher, who wrote about them in the 1930s. In Fisher’s telling, economic expansion leads to growth in the number and size of bank loans—and even the number of banks themselves—and a corresponding increase in borrowing by non-bank firms. As the expansion persists, bankers continue to seek profitable investments, even though the supply of worthwhile projects dwindles as the boom progresses and more of them are funded. The relative scarcity of sound projects does not dissuade eager lenders, however, who continue to dole out funds. Fisher laments this excessive buildup of debt during cyclical upswings: “If only the (upward) movement would stop at equilibrium!” But, of course, it doesn’t.

When the economic expansion ends, the weakest firms—typically those that received loans later in the expansion—have difficulty repaying and default. Loan defaults lead to distress among the banks that made the now-impaired loans, panic among the depositors who entrusted their savings to these banks, and a decline in the wealth of bank shareholders. Even if no depositor panic ensues, banks under pressure will become more defensive by reducing their loan portfolio, both by making fewer new loans and by refraining from renewing outstanding loans that have reached maturity. Banks and individuals will sell securities in an attempt to raise cash, leading to declines in stock and bond markets. The contraction in the availability of loans will hurt firms and further exacerbate the business cycle downturn. The deepening contraction will lead to more loan defaults and bank failures, which will further depress security prices and worsen the business cycle downturn that is already underway.

Two other distinctive features characterize boom-bust crises. First, the economic expansion is typically fueled by cheap and abundant credit. That is, bankers have a lot of money to lend, which they are willing to lend at low interest rates. This often occurs when the central bank follows a low interest rate policy. In earlier times, when gold constituted a substantial portion of the money stock, credit expansion could be the result of gold discoveries or gold imports, which made loanable funds plentiful and cheap. It could also be a consequence of an increased willingness on the part of banks to lend the funds that they already have on hand, or of the emergence of new ways of lending money, including the creation of new types of securities. Second, boom-bust cycles are often accompanied by increased speculation in a particular asset or class of assets, the price of which, fed by cheap and abundant credit, rises dramatically during the course of the boom—and then collapses catastrophically during the bust.

The scenario described above aptly describes many nineteenth- and twentieth-century crises. For example, England suffered crises in 1825, 1836–39, 1847, 1857, 1866, and 1890. Each of these was preceded by several years of rapid economic growth, frequently fueled by gold imports and accompanied by increased speculation. The object of speculation varied from crisis to crisis and included at different times grain, railroads, stocks, and Latin American investments. Similar stories can be told about the US crises of 1837, 1857, 1873, 1893, and 1907, and many others in Australia, Canada, and Japan, and across Western Europe.

As the world economy became more interconnected during the course of the nineteenth century, increasing numbers of these crises took on an international character. During the weeks following the outbreak of a panic in London in August 1847, business failures spread outward from London to the rest of Britain, to British colonies, and to continental and American destinations. Similarly, the 1857 crisis, which started in the United States, soon spread to Austria, Denmark, and Germany. The even more severe crises of the mid-1870s, early 1890s, 1907, and early 1920s, not to mention the Great Depression of the 1930s, also traveled extensively.

It is impossible to identify precisely when the run-up to the subprime crisis began. According to the National Bureau of Economic Research, the private academic organization that is the arbiter of when business cycle expansions and contractions begin and end, the longest-ever US business cycle expansion began in March 1991 and lasted until March 2001. Following an eight-month downturn—among the shortest in US history—the business cycle turned upward again in November 2001. That expansion ended late in 2007, about the same time that the subprime meltdown began. The boom, like countless others before, was fed by expansionary fiscal and monetary policies.

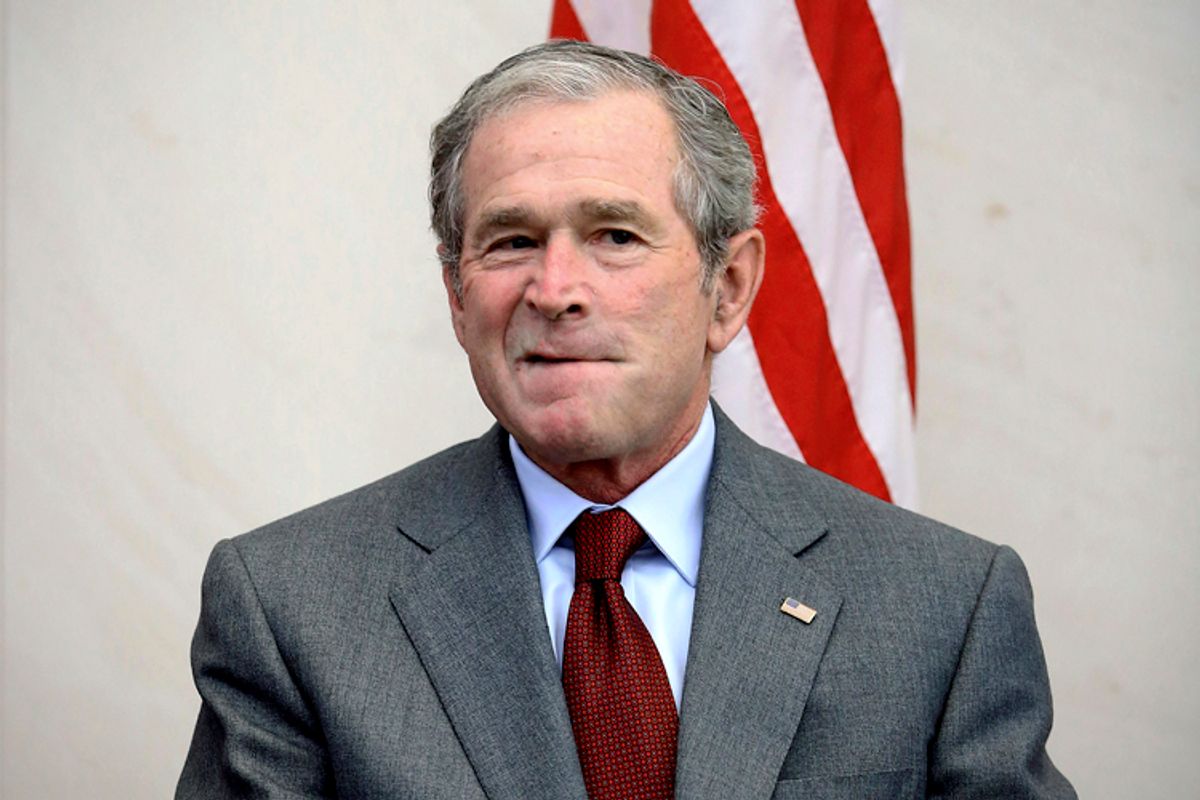

The business cycle expansion that began in 2001 was given a substantial boost by a series of three tax cuts during the first three years of the administration of President George W. Bush. President Bush was ideologically committed to lower taxes and had pledged, if elected, to cut taxes. In his acceptance speech to the Republican National Convention in Philadelphia on August 3, 2000, Bush said:

Today, our high taxes fund a surplus. Some say that growing federal surplus means Washington has more money to spend. But they’ve got it backwards. The surplus is not the government’s money. The surplus is the people’s money. I will use this moment of opportunity to bring common sense and fairness to the tax code. And I will act on principle. On principle...every family, every farmer and small businessperson, should be free to pass on their life’s work to those they love. So we will abolish the death tax. On principle...no one in America should have to pay more than a third of their income to the federal government. So we will reduce tax rates for everyone, in every bracket.

Supported by a Republican majority in the House of Representatives and a nearly evenly divided Senate, Bush signed into law the Economic Growth and Tax Relief Reconciliation Act of 2001 (EGTRRA), the Job Creation and Worker Assistance Act of 2002, and the Jobs and Growth Tax Relief Reconciliation Act of 2003. These laws lowered tax rates in all brackets, reduced taxes on capital gains and some dividends, and increased a variety of exemptions, credits, and deductions. Some of the tax cuts, which were to be phased in under EGTRRA, were accelerated under the 2003 legislation.

Further fiscal stimulus was provided by the invasion of Afghanistan the month following the terrorist attacks on September 11, 2001, and by the war in Iraq, which began in March 2003. The Afghanistan war led to the deployment of 5,200 US troops there during fiscal year 2002; by 2008, the combined in-country forces in Afghanistan and Iraq had reached nearly 188,000. Thanks in large part to the collapse of the Soviet Union, US military spending had been relatively flat in the 1990s, never exceeding $290 billion in any year. By 2008, annual military spending had more than doubled, to $595 billion.

President Bush was faithful to his pledge to eliminate the federal government’s budget surplus. The net effect of the tax cuts, combined with the additional expenditure related to the overseas conflicts, turned the federal government’s $236 billion surplus in 2000 into a $458 billion deficit by 2008. This fiscal stimulus encouraged more spending by households and firms and contributed to the economic boom that lasted from 2001 to 2007.

The boom was also fueled by expansionary monetary policy, as the Federal Reserve kept interest rates low for a prolonged period. At the end of 2000, the federal funds rate, a key indicator of Federal Reserve monetary policy, stood at 6.5 percent. Following a sharp decline in stock prices in March 2001, primarily the result of the collapse of internet stocks (the “dot-com bubble”), the Federal Reserve lowered interest rates swiftly and dramatically in an effort to cushion the effects of the fall on the wider economy. By the end of March 2001, the federal funds rate had been reduced to 5 percent, and by June it was 4 percent. In August it stood at 3.5 percent, and was further reduced following the terrorist attacks of September 11. By the end of 2001, the federal funds rate was below 2 percent—its lowest level in more than 40 years. The rate remained at or below 1 percent for nearly a year during 2003–04, and below 2 percent until the middle of November 2004. The low interest rate policy, especially during 2003–04, helped to ignite a speculative boom.

The Fed kept interest rates low for two reasons. First, employment had recovered more slowly than expected from the 2001 recession, the so-called jobless recovery, indicating that a more prolonged period of lower interest rates was needed to stimulate the economy. Second, Fed policy makers were concerned that the country might fall into a Japanese-style “lost decade” if they did not make a clear and convincing case through bold actions that low interest rates would persist as long as required to boost the economy. A less charitable view holds that Fed chairman Alan Greenspan’s motives were less economic and more ideological and self-serving—supporting easy monetary policy to increase the re-election prospects of fellow Republican George W. Bush, who was locked in a tight re-election race, or to curry favor with the administration so that he would be reappointed Fed chairman when his term expired in 2004. And, in fact, President Bush did nominate Greenspan for an unprecedented fifth term in May 2004. Whatever the reason for the prolonged monetary easing, it was crucial to the development of the boom.

As previously noted, boom-bust cycles are often accompanied by increased speculation in a particular asset or class of assets. During the subprime meltdown, the object of speculation was real estate. Housing prices, which had risen by about 25 percent during the entire decade of the 1990s, more than doubled between 2000 and 2006. The amount of debt undertaken to finance housing purchases also increased dramatically. Although expansionary fiscal and monetary policies undoubtedly fueled the explosion in house prices, the boom was enabled by a variety of legal and financial developments that eased constraints on the housing finance market, particularly the subprime mortgage market.

Subprime mortgages are housing loans made to less creditworthy borrowers. They include loans to borrowers who have a history of late payments or bankruptcy, who are not able to document their income sufficiently, or who are able to make only a small down payment on the property to be purchased. Because subprime loans are considered risky, financial institutions will only make them if they can charge higher interest rates than they charge more creditworthy borrowers. Subprime mortgage lending became more common in the 1980s when federal legislation deregulated interest rates and preempted state interest rate ceilings, allowing lenders to issue higher-interest-rate mortgages. Subsequent legislation eliminated the tax deductibility of consumer credit interest, but permitted the continued deductibility of home mortgage interest on both primary and secondary residences. This encouraged consumers to engage in cash-out refinancing, that is, refinancing an existing mortgage with a new, larger loan and taking the difference in cash. The net effect was to shift what might otherwise have been credit-card debt to mortgage debt. When interest rates increased during the mid-1990s, reducing loan demand from high-quality borrowers, lenders sought out new opportunities among the less creditworthy, which contributed to a further increase in new subprime mortgage loans.

The expansion of the subprime market was aided by securitization, a process that involves bundling a group of individual loans together into a mortgage-backed security (MBS) and selling pieces of that security to investors. A benefit of securitization is that it makes it relatively easy for firms and individuals to invest in mortgages and therefore increases the overall amount of money available for mortgage lending. The average individual investor would have no interest in buying the mortgage loan on my house. Anyone who bought my loan would have to assume administrative costs such as billing, record keeping, and tax reporting. And even if I appear to be a good credit risk and hence unlikely to default, the investor who bought my loan would risk a substantial loss if through some unforeseen event I was unable to make my mortgage payments. On the other hand, that average investor would be much more likely to buy a share of a pool of several thousand mortgages like mine, where the administrative costs could be divided up among many investors and the default of a few loans (out of thousands) would not jeopardize the value of the investment. In this way, securitization increased the funding available for housing finance.
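The diversification argument above can be made concrete with a little arithmetic. The Python sketch below is illustrative only: it assumes equal-sized loans that default independently of one another, and the default probability (2 percent) and the loss on a defaulted loan (40 percent of its value) are hypothetical figures, not data from the crisis. Under those assumptions the expected loss per dollar is the same for a single loan as for a pool of thousands, but the spread of possible outcomes shrinks with the square root of the number of loans.

```python
import math


def loss_stats(n_loans: int, p_default: float, loss_given_default: float) -> tuple[float, float]:
    """Expected loss and standard deviation of loss, both as fractions of the
    pool's value, for n equal-sized loans that (by assumption) default
    independently of one another."""
    expected = p_default * loss_given_default
    # Variance of the share of n independent loans that default, scaled by
    # the fraction of value lost on each defaulted loan.
    std = loss_given_default * math.sqrt(p_default * (1 - p_default) / n_loans)
    return expected, std


# Hypothetical inputs: 2% chance of default, 40% of the loan lost if it does.
for n in (1, 100, 5000):
    exp_loss, sd = loss_stats(n, p_default=0.02, loss_given_default=0.4)
    print(f"{n:>5} loans: expected loss {exp_loss:.2%}, std dev {sd:.3%}")
```

The independence assumption does most of the work here. When house prices fall nationwide, defaults rise together, and a pool offers much less protection than this calculation suggests.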

Although mortgage securitization had been popularized in the 1980s, the securitized loans at that time were typically “conforming” mortgages, that is, of a standard size with a relatively creditworthy borrower and high-quality collateral. Securities backed by pools of conforming mortgages were eligible to be guaranteed by the quasi-government Federal National Mortgage Association (“Fannie Mae”) and Federal Home Loan Mortgage Corporation (“Freddie Mac”), and hence carried a low risk of default and, therefore, low interest rates. In an effort to increase home ownership by the less affluent during the 1990s, government policy encouraged Fannie Mae and Freddie Mac to increase the flow of mortgage lending to low- and moderate-income areas and borrowers, including relaxing lending standards. These policy actions gave a boost to the subprime market: subprime mortgage-backed securities made up 3 percent of outstanding MBSs in 2000 and 8.5 percent of all new issues; by 2006, they constituted 13 percent of outstanding MBSs and nearly 22 percent of new issues. More than 80 percent of all new subprime loans were financed by securitization during 2005 and 2006, up from about 50 percent in 2001.

The proliferation of subprime mortgage-backed securities led them to be used in a variety of even more complex instruments, such as collateralized debt obligations (CDOs) and credit default swaps (CDSs). CDOs consist of a portfolio of debt securities (including subprime MBSs), which are financed by issuing even more securities. These securities are classified into higher- and lower-risk portions, called “tranches,” with higher-risk tranches providing higher returns. The tranches were assigned risk ratings by the credit ratings agencies Moody’s, Standard & Poor’s, and Fitch, which were harshly criticized in the wake of the crisis for having been overgenerous in their ratings. One of the main complaints against the ratings agencies is that since they are paid by issuers, who pay a lower interest rate if they receive a higher rating, they have an incentive to be overly generous in assigning ratings. As a managing director of Moody’s, one of the big-three ratings agencies, said anonymously in 2007: “These errors make us look either incompetent at credit analysis or like we sold our soul to the devil for revenue, or a little bit of both.”
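Why higher-risk tranches pay higher returns follows from the order in which tranches absorb losses. The Python sketch below assumes a single, deliberately simplified rule: losses on the underlying pool hit the most junior tranche first and move up to the next tranche only once the one below is wiped out. The tranche names and sizes are hypothetical, and real CDOs used far more elaborate payment rules.

```python
def allocate_losses(pool_loss: float, tranches: list[tuple[str, float]]) -> dict[str, float]:
    """Apply a pool-level loss to tranches ordered from most junior to most
    senior. Each tranche absorbs losses up to its own size before any loss
    reaches the next tranche up."""
    remaining = pool_loss
    absorbed_by = {}
    for name, size in tranches:
        hit = min(remaining, size)
        absorbed_by[name] = hit
        remaining -= hit
    return absorbed_by


# Hypothetical structure for a pool worth 100, listed junior first.
structure = [("equity", 5.0), ("mezzanine", 15.0), ("senior", 80.0)]

for loss in (3.0, 12.0, 30.0):
    print(f"pool loss {loss:>4}: {allocate_losses(loss, structure)}")
```

In this toy structure a 3 percent loss on the pool falls entirely on the equity tranche, and the senior tranche is untouched until pool losses exceed 20 percent. The senior tranche's safety therefore depends entirely on how large pool losses can get; if those losses are underestimated, the cushion beneath it is thinner than it appears.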

Credit default swaps are contracts in which one party (the protection seller) agrees to pay, in return for a periodic fee, another party (the protection buyer) in the case of an “adverse credit event,” such as bankruptcy. The availability of CDSs encouraged financial firms to hold CDOs, since they believed that CDSs insured them against losses on their CDOs. However, even though a CDS sounds very much like conventional insurance, it is not. A life-insurance company issues many policies; since the likelihood of death is small for any given policyholder, claims against the insurer are typically paid off from the proceeds of premia paid by policyholders who do not die. CDSs involve no such pooling of risk, so the protection seller’s ability to pay off in the case of an adverse event depends solely on the value of the assets it holds to back the contract, and that value may fall during a crisis.
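The contrast with insurance can be sketched in a few lines of Python. The sketch assumes, hypothetically, that every contract a protection seller has written is triggered at the same time, as tends to happen in a crisis, and that each buyer is owed the notional amount of the contract minus whatever is recovered on the defaulted debt. The recovery rate, the seller's assets, and the size of the decline in those assets are made-up numbers for illustration.

```python
def cds_shortfall(notionals: list[float], recovery_rate: float,
                  seller_assets: float, asset_drop: float) -> float:
    """Amount a protection seller cannot pay if every contract it wrote is
    triggered at once and its backing assets lose `asset_drop` of their value.
    Each buyer is owed notional * (1 - recovery_rate)."""
    owed = sum(n * (1 - recovery_rate) for n in notionals)
    available = seller_assets * (1 - asset_drop)
    return max(0.0, owed - available)


# Hypothetical seller with 70 in assets backing three contracts.
contracts = [40.0, 30.0, 30.0]
print(cds_shortfall(contracts, recovery_rate=0.4, seller_assets=70.0, asset_drop=0.0))
print(cds_shortfall(contracts, recovery_rate=0.4, seller_assets=70.0, asset_drop=0.3))
```

With its assets intact, this seller can cover all of its claims; if those assets lose 30 percent of their value at the same moment the claims arrive, it cannot. A life insurer, by contrast, expects only a small and fairly predictable fraction of its policies to pay out in any year.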

It is well beyond the scope of this chapter to explain the veritable alphabet soup of derivative securities—including CDO²s, synthetic CDOs, multisector CDOs, and cash flow CDOs—that emerged during this time. Even without details, it is clear that the development of these instruments, combined with elements of deregulation and misguided policy, made it easier and more profitable for less-creditworthy borrowers to obtain mortgage loans. Because of securitization and the attractive returns paid by these new securities, it was much easier for subprime-related securities to be sold in the United States and abroad. When the US housing boom collapsed, the value of many of these securities fell sharply and led to the collapse of institutions that held or had loaned money against them.

It is clear that there were misaligned incentives every step of the way toward the subprime crisis. Edmund Andrews, at the time a New York Times economics correspondent who was caught up in his own subprime nightmare, explained the chain of events:

My mortgage company hadn’t cared because it would sell my loan to Wall Street. Wall Street firms hadn’t cared, because they would bundle the loan into a mortgage-backed security and resell it to investors around the world. The investors hadn’t cared, because the rating agencies had given the securities a triple-A rating. And the rating agencies hadn’t cared, because their models showed that these loans had performed well in the past.

The collapse of the real estate market led to a sharp decline in the value of mortgage-backed securities that had supported it and widespread distress among financial institutions—in short: the type of bust that Irving Fisher had written about more than three-quarters of a century earlier.

*

An additional factor contributing to the growth of the speculative bubble was the absence of effective regulation and supervision of the new and complex securities and of the institutions that employed them. To explain the importance of government regulation, we must return to fear and greed. Virtually any financial decision can be framed in terms of these twin motives. Should I invest my life savings in a risky venture that will either make me a millionaire or put me in the poorhouse? Or should I restrict myself to only the bluest of blue chip stocks and the safest, if low yielding, bonds? Greed will steer me toward the former choice; fear to the latter. If there is a boom in risky ventures and their owners all seem to be making money hand over fist, my greed might get the better of my fear. Typically, the government doesn’t get involved in my decision-making process. I am free to choose to take as much risk as I see fit. The government maintains bankruptcy courts that will help my creditors carve up my assets if I do go bankrupt, but the government will not prevent me from making choices that might increase the probability that I become bankrupt.

Bankers are also subject to fear and greed. Because most of the funds that banks use are entrusted to them by depositors, governments establish rules to ensure that bankers’ greed does not overwhelm their fear. Among the most important of these rules are capital requirements. Capital requirements prevent banks from relying solely on deposits to fund their operations, forcing them also to use money that they and their shareholders put up. This money is known as capital. Capital requirements protect depositors in several ways. If a bank fails, capital can be used to pay off depositors. Additionally, holding capital encourages banks not to behave in an excessively risky way, so as not to jeopardize their own money. Capital also provides a concrete signal to depositors that the bank will not undertake excessive risk. The capacity of capital to reduce risk taking is sometimes described as putting banks in the position of having “skin in the game.” Governments have set bank capital requirements for many years, and in the Basel I (1988), Basel II (2004), and Basel III (2010) Accords, an international consensus was reached on minimum capital standards. Under the Basel Accords, banks are required to hold proportionately more capital against inherently riskier assets.
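The rule that riskier assets require proportionately more capital amounts to a weighted sum. The Python sketch below uses a simplified version of the original 1988 Basel framework, in which capital had to equal at least 8 percent of risk-weighted assets and, broadly, government bonds of OECD countries carried a 0 percent weight, residential mortgages 50 percent, and ordinary business loans 100 percent. The actual rules contain many more categories and detailed definitions of capital; the two bank balance sheets here are hypothetical.

```python
# Simplified Basel I-style risk weights (a small subset, for illustration only).
RISK_WEIGHTS = {
    "oecd_government_bonds": 0.0,
    "residential_mortgages": 0.5,
    "business_loans": 1.0,
}
MIN_CAPITAL_RATIO = 0.08  # capital must be at least 8% of risk-weighted assets


def required_capital(holdings: dict[str, float]) -> float:
    """Minimum capital for a bank holding the given amounts of each asset type."""
    risk_weighted_assets = sum(
        amount * RISK_WEIGHTS[asset_type] for asset_type, amount in holdings.items()
    )
    return MIN_CAPITAL_RATIO * risk_weighted_assets


# Two hypothetical banks with the same total assets (1,000) but different mixes.
cautious = {"oecd_government_bonds": 600.0, "residential_mortgages": 300.0, "business_loans": 100.0}
aggressive = {"oecd_government_bonds": 100.0, "residential_mortgages": 300.0, "business_loans": 600.0}
print(required_capital(cautious), required_capital(aggressive))
```

Because the requirement depends on how assets are classified, anything that lowers an asset's effective risk weight, or lets a bank count something else in place of capital, shrinks the cushion the rule is meant to provide.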

In 1996, the Federal Reserve permitted banks to use CDSs as a substitute for bank capital under certain circumstances. Thus, holding CDSs for which the protection seller was a highly rated company, such as American International Group—which was bailed out by the Federal Reserve in 2008—allowed banks to hold less actual capital, making them more vulnerable to failure if the protection seller was unable to fulfill its part of the bargain. Regulators became aware of the potential problems surrounding some of these CDSs as early as 2004, but did not take any action. Further, in 2004 the Securities and Exchange Commission (SEC) ruled that the five largest investment banks (Morgan Stanley, Merrill Lynch, Lehman Brothers, Bear Stearns, and Goldman Sachs) would be permitted to use their own internally created risk models to determine the levels of capital required rather than some government-mandated amount: in other words, these institutions became—to some extent—the judge of how much capital they needed to hold to ensure their safety. Although the SEC announced its determination to hire more staff and have regular meetings with the investment banks to monitor the situation, capital ratios declined at all five investment banks following the ruling.

Another criticism of the regulators is that they did not impose more transparency on the derivatives market. That is, because these securities were so complex and were not traded on securities exchanges, it was easy for clients to misunderstand, or be misled about, the risky nature of these investments. Such allegations were made in connection with the derivatives transactions at the heart of the bankruptcy of Orange County, California, and in lawsuits brought against Bankers Trust by Gibson Greetings and Procter & Gamble in the mid-1990s. Efforts to increase disclosure initiated by the Commodity Futures Trading Commission (CFTC) met with opposition from the Federal Reserve, Treasury, and Congress; the Commodity Futures Modernization Act of 2000 exempted these derivatives from government supervision.

*

The subprime crisis has indeed been the longest, deepest, and broadest—in short, the worst—financial crisis since the Great Depression. Although the severity of the crisis was extraordinary, its origins were not: the boom-bust pattern of financial crises has been repeated again and again during the last 200 years.

When J. P. Morgan was asked what the stock market would do, he famously replied, “It will fluctuate.” And, indeed, that is what most markets—be they markets for securities, currency, commodities, or real estate—do, rising and falling in an unpredictable manner. When the value of an asset, or a class of assets, continually rises for a prolonged period of time, investors often come to believe that increases will continue indefinitely: fear is banished and greed becomes the watchword. Once the belief that market prices can only go up is established, it makes good sense to borrow money to invest in that market—after all, the loan can be repaid with the proceeds from the sale of the appreciated asset. The more firmly entrenched the belief that the asset will continue to rise, the greater the lengths to which reasonable people will go to take advantage of the seemingly free lunch.
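The arithmetic behind the “seemingly free lunch” is simple leverage. In the Python sketch below, a hypothetical buyer puts down 10 percent of a house's price and borrows the rest; for simplicity it ignores interest, taxes, and transaction costs. Under those assumptions a 10 percent rise in the price doubles the buyer's own money, while a 10 percent fall wipes it out.

```python
def return_on_equity(price: float, down_payment_share: float, price_change: float) -> float:
    """Gain or loss on the buyer's own money after a given change in the price,
    ignoring interest, taxes, and transaction costs."""
    equity = price * down_payment_share   # buyer's own money
    debt = price - equity                 # borrowed, repaid in full on sale
    sale_proceeds = price * (1 + price_change)
    return (sale_proceeds - debt - equity) / equity


# Hypothetical house price and a 10 percent down payment.
for change in (0.10, 0.0, -0.10):
    roe = return_on_equity(300_000, down_payment_share=0.10, price_change=change)
    print(f"house price {change:+.0%} -> return on down payment {roe:+.0%}")
```

The smaller the down payment, the more extreme both outcomes become.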

The housing boom, fueled by ideologically driven expansionary fiscal and monetary policies, encouraged the development of new and increasingly risky ways of taking advantage of its apparently limitless profit possibilities. Mortgage lending, which previously had been confined to those most able to afford it, was made available—also for ideological reasons—to individuals of more precarious means: those with less-solid credit histories, with less money to make a down payment, and with lower incomes. There are sound public policy arguments for promoting home ownership among all sectors of society; however, encouraging the less well off to take on debt that may be beyond what they are able to service—particularly in the event of a decline in the economy and house prices—dramatically increases the risk of a severe crisis. Further, because subprime lending was profitable, financial institutions regularly devised new and increasingly risky means of raising even more money to pour into the housing market. This further inflated the bubble and made its collapse that much more painful. Finally, the supervisory and regulatory apparatuses that were specifically mandated to protect the system utterly failed.

It would be an oversimplification to place the blame for the subprime crisis solely on ill-conceived fiscal and monetary policies. Fingers have been pointed—with good reason—at a host of alternative villains, including Fannie Mae, Freddie Mac, the credit ratings agencies, and the SEC. And, of course, borrowers themselves were not blameless. According to Edmund Andrews:

Nobody duped me, hypnotized me, or lulled me with drugs. Like so many others—borrowers, lenders, and the Wall Street deal makers behind them—I thought I could beat the odds. Everybody had a reason for getting in trouble. The brokers and deal makers were scoring huge commissions. The condo flippers were aiming for quick profits. The ordinary home buyers wanted to own their first houses, or bigger houses, or vacation houses. Some were greedy, some were desperate, and some were deceived.

Nonetheless, the bulk of the blame for the crisis must be assigned to the Bush administration’s fiscal policy and the Greenspan Fed’s monetary policy. Why? Quite simply: fear and greed. The economic and speculative boom launched by the fiscal and monetary policies of the early 2000s raised the returns to greed—that is, the incentive to take on additional risk—to extraordinary levels. No matter how fear-inspiring the regulation and supervision were—and they were not—they would have been overwhelmed by dangerously high levels of greed.

Reprinted from “WRONG: Nine Economic Policy Disasters and What We Can Learn From Them” by Richard S. Grossman with permission from Oxford University Press. Copyright © 2013 by Richard S. Grossman
