Drug development is expensive, time-consuming, and risky. A typical new drug costs billions of dollars to develop and requires more than ten years of work, yet only about 0.02% of the drugs in development ever make it to market.

Some claim that AI, or artificial intelligence, will revolutionize drug development by drastically shortening development times and cutting costs. Many scientists and business consultants are especially optimistic about AlphaFold, an AI tool that can predict the shapes of nearly every known protein. AlphaFold was built by DeepMind, an AI lab owned by Google parent company Alphabet, and the argument goes that predicting protein structures in great detail is key to developing drugs quickly. As one AI company boasts, "We … firmly believe that AI has the potential to transform the drug discovery process to achieve time and cost efficiencies."

That kind of rhetoric might be dismissed as typical fake-it-till-you-make-it puffery. But the Washington Post, a presumably neutral observer, has promoted this narrative, too: "New research on proteins promises drug breakthroughs, and much else," declared one op-ed it ran. The New York Times struck a similar note in an article titled "We Need to Talk About How Good A.I. Is Getting." One thing is certainly true: "Investors bet on AI start-ups to turbocharge drug development," as the Financial Times reported.

Moderna claims that AI computer simulations helped it analyze genetic sequence data in an "optimal way." Never mind that the "optimal" way to do anything is seldom known. More importantly, most of the time and effort in drug development is spent not on computer simulations but on clinical trials, a highly uncertain and risky process that artificial intelligence algorithms do not make faster or cheaper. A Nature article titled "The lightning-fast quest for COVID vaccines—and what it means for other diseases" doesn't even mention AI among the reasons for the rapid development of COVID-19 vaccines. Instead, it explains:

> The world was able to develop COVID-19 vaccines so quickly because of years of previous research on related viruses and faster ways to manufacture vaccines, enormous funding that allowed firms to run multiple trials in parallel, and regulators moving more quickly than normal. Some of those factors might translate to other vaccine efforts, particularly speedier manufacturing platforms.

Determined to get a COVID-19 vaccine to the public before the November 3, 2020, presidential election, the U.S. government devoted $14 billion to support the pharmaceutical companies' vaccine efforts. The government agreed to pay Pfizer $5.87 billion for 300 million doses if Pfizer developed an FDA-approved vaccine — regardless of whether the vaccine was still needed. Moderna was given $954 million for research and development and a guaranteed $4.94 billion federal purchase of 300 million doses. Johnson & Johnson was given $456 million for research and development and promised $1 billion for 100 million doses.

The relative unimportance of AI in COVID-19 vaccine development is consistent with the conclusion of many scientists that AI is not about to revolutionize drug development. The biggest problem is that clinical trials are the longest and typically most expensive part of the process, and AI cannot replace actual trials. Even AI's impact on drug discovery may be limited. A Science op-ed recently argued that "[AI] doesn't make as much difference to drug discovery as many stories and press releases have had it…. Protein structure prediction is a hard problem, but even harder ones remain."

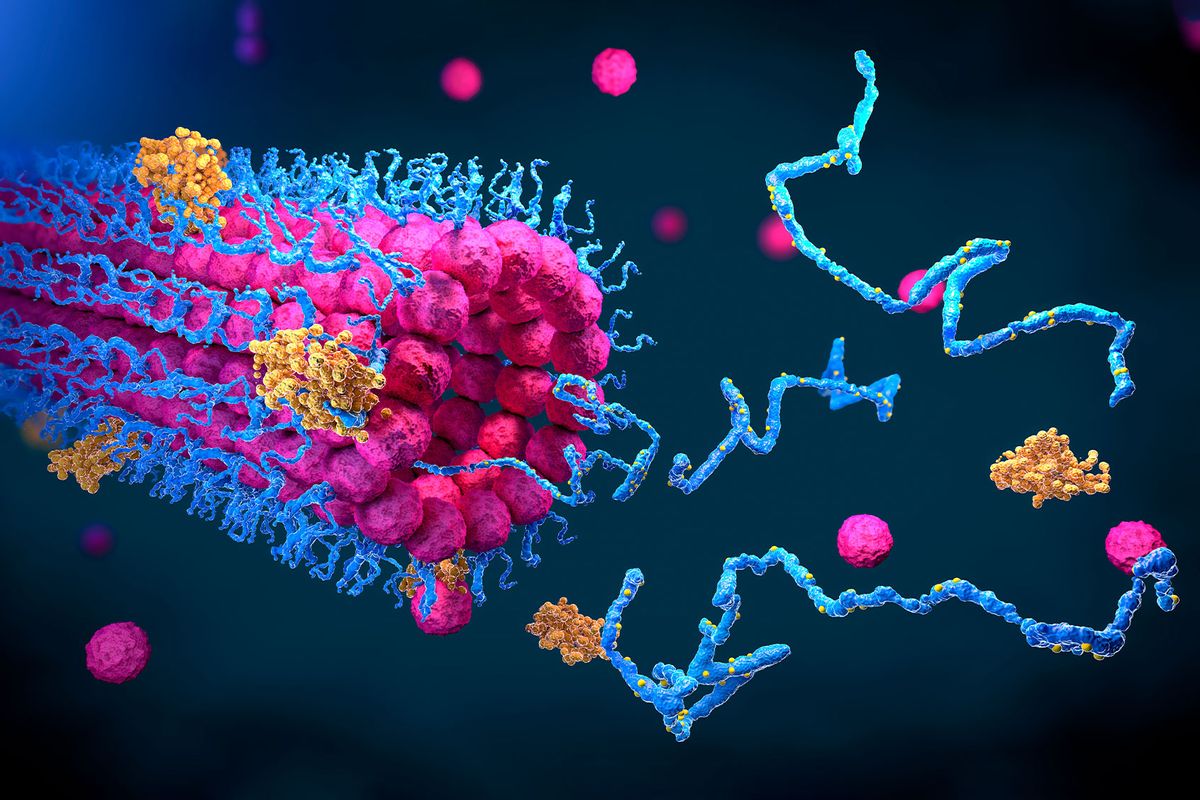

An often crucial step in drug development is determining whether a drug binds to the target protein. MIT researchers have shown that AlphaFold cannot do this, and DeepMind itself admits as much: "Predicting drug binding is probably one of the most difficult tasks in biology: these are many-atom interactions between complex molecules with many potential conformations, and the aim of docking is to pinpoint just one of them."

Likewise, the CEO of drug company Verseon is deeply skeptical:

> People are saying, "AI will solve everything." They give you fancy words. "We'll ingest all of this longitudinal data and we'll do latitudinal analysis." It's all garbage. It's just hype.

One huge hurdle for all AI data-mining algorithms is that the data deluge has made the number of promising-but-coincidental patterns waiting to be discovered far, far larger than the number of useful relationships, which means that the probability that a discovered pattern is truly useful is very close to zero. Verseon's CEO says that the total number of possible chemical compounds in the universe is on the order of 10 to the 33rd power. Companies cannot run clinical trials on every possible compound, nor can they rely on AI to find the needles in this enormous haystack.

It will take real intelligence — not artificial intelligence — to determine which compounds are most likely to generate payoffs that justify the enormous costs of testing. Likewise, it will take real intelligence — not artificial intelligence — to conduct the clinical trials needed to gauge the efficacy and possible side effects.
