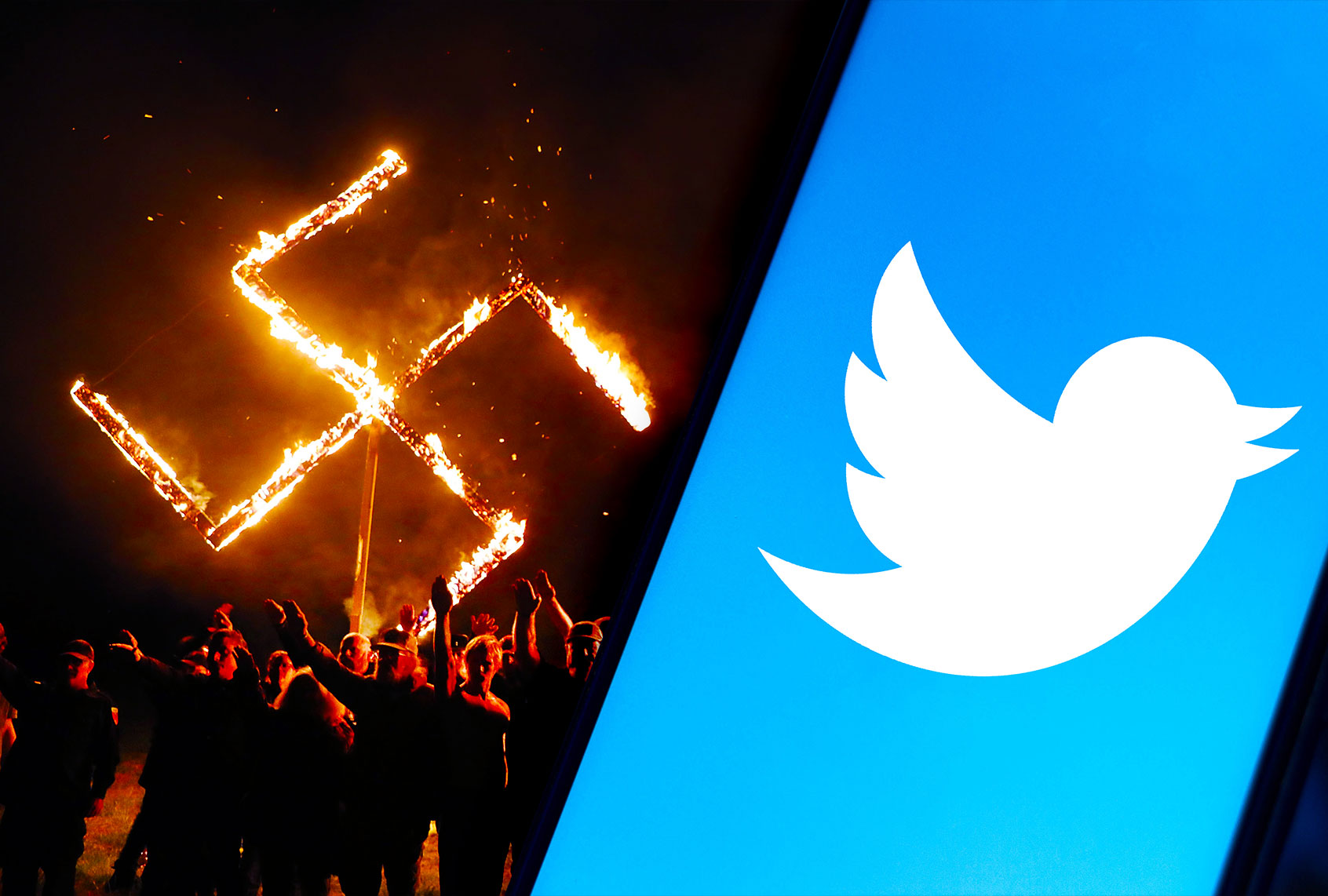

Whenever a far right personality is banned from social media — or even considered as a possible target for such a ban — conservatives frequently respond that being deplatformed only makes them stronger.

In some cases, as with Trump, the results of this deplatforming can be mixed. A recent New York Times study found that Trump’s bans from Facebook and Twitter have reduced the spread of his Big Lie on both platforms. At the same time, his allies have still managed to keep his larger political brand alive on those sites by spreading his messages to their millions of followers.

When it comes to other right-wing misinformation peddlers, a new study in the journal Proceedings of the ACM on Human-Computer Interaction found that deplatforming can be quite effective in curtailing both malicious actors and their narratives. The researchers learned this after studying the Twitter fortunes of three influential far right personalities — Owen Benjamin, Alex Jones and Milo Yiannopoulos — after they were kicked off the site.

“Analyzing the bans of 3 extremist influencers with thousands of followers, we found that banning disrupted the discussions about influencers: posts referencing each influencer declined significantly,” Shagun Jhaver, the study’s lead author and an assistant professor in the Department of Library and Information Science at Rutgers University-New Brunswick, told Salon by email. “More importantly, our study showed that deplatforming helped reduce the spread of many anti-social ideas and conspiracy theories popularized by influencers. Further, we found that banning significantly reduced the overall posting activity and toxicity levels of thousands of supporters for each influencer.”

The researchers looked at more than 49 million tweets for their study. In the process, they found that removing the bad actors reduced not only the overall activity of their supporters but also the toxicity of those supporters’ posts. Posts that referenced the accounts in question declined by more than 91 percent as a result of their deplatforming, while the number of users tweeting about them fell by roughly 90 percent. This is welcome news that speaks to the effectiveness of some of Twitter’s anti-misinformation and anti-hate strategies.

“Some features introduced by Twitter, such as tagging tweets containing misinformation and providing users the ability to block tweets containing offensive keywords have been helpful in keeping off inappropriate content,” Jhaver explained. At the same time, this success is not automatically transferable to other platforms, given that Twitter’s moderation has a more centralized structure, “especially when compared to multi-community platforms like Reddit and Facebook Groups that support localized rule-creation and moderation.”

Jhaver also addressed the Facebook Papers, a trove of leaked documents which, among other things, confirm previous reports that Facebook has deliberately taken a lax approach to right-wing influencers on its site.

“We are still processing all that’s coming out of the Facebook Papers,” Jhaver told Salon. “What’s clear is that Facebook has treated influencers, especially political figures, differently than its regular users, e.g., it has avoided sanctioning influencers even when they violate platform policies. To maintain procedural consistency and transparency, Facebook should dismantle such biases while making its moderation decisions.”

A similar lesson applies to the role of social media platforms in avoiding a repeat of the Jan. 6 insurrection.

“When toxic influencers or communities are allowed to remain on the platform, they can gain a critical mass of followers that can be mobilized to inflict severe offline harms,” Jhaver pointed out. “Therefore, platforms like Twitter should be more proactive in identifying and sanctioning toxic influencers.”

Despite their progress, Twitter and other social media companies still have a long road ahead. An Anti-Defamation League report earlier this month revealed that pro-QAnon, anti-vaccine, anti-Semitic and other radical far right content from sites like Gab continues to make its way to Twitter and doesn’t appear to be stopping anytime soon.