When a pair of climate change deniers spread lies about climate scientist Michael Mann, he sued his attackers and actually won, with a jury awarding him $1 million in damages in February.

The Competitive Enterprise Institute's Rand Simberg and the National Review's Mark Steyn wrote a pair of blog posts about Mann in 2012 in which they made two defamatory claims: First, they falsely accused Mann of manipulating data in his famous "hockey stick graph" depicting the rapid increase in Earth's temperature due to burning fossil fuels, and then they baselessly compared Mann to notorious child molester Jerry Sandusky, writing that he "molested and tortured data" and was "the Jerry Sandusky of climate science."

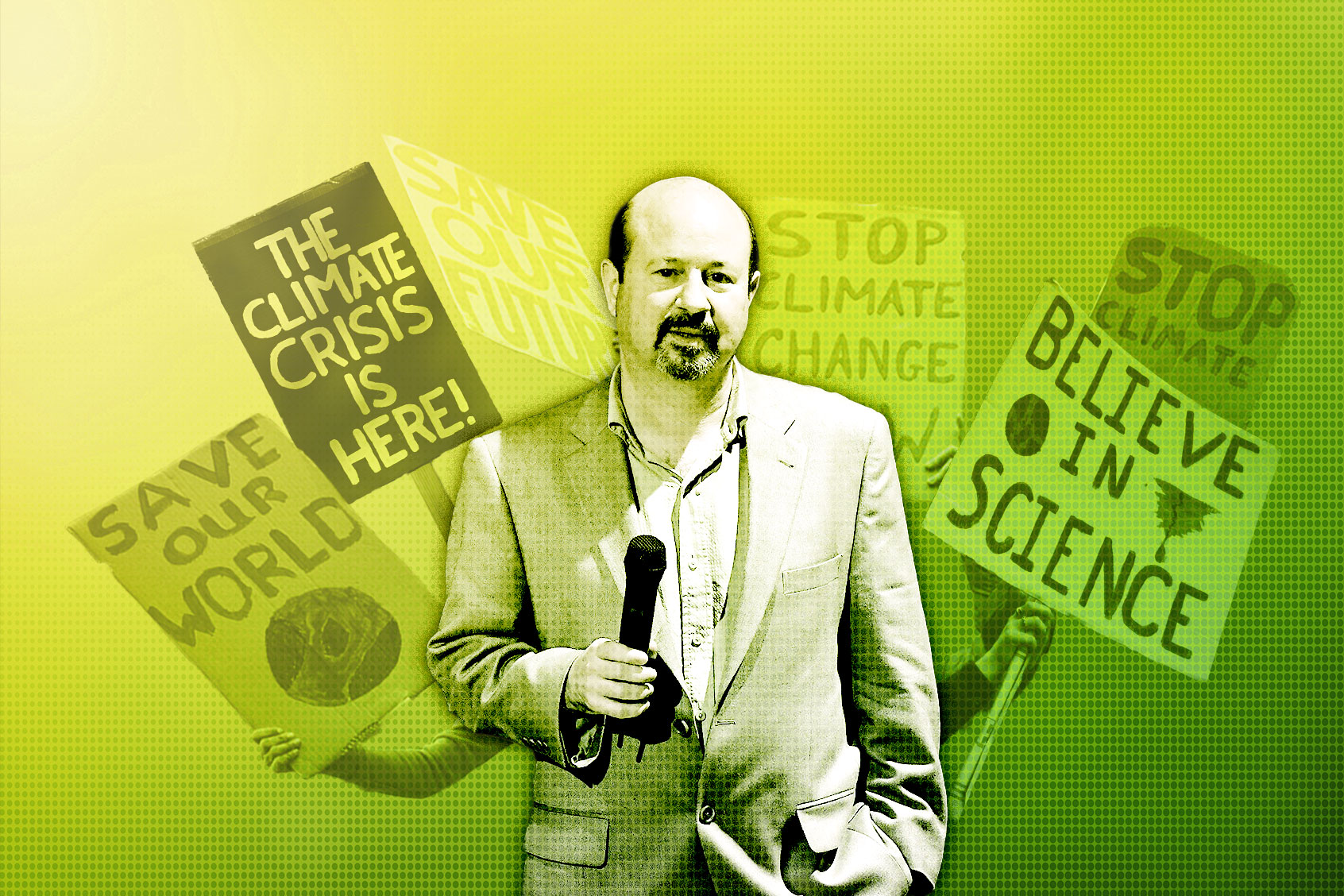

Now Mann is using his experience to urge others to stand up to spreaders of climate change disinformation. At a time when fossil fuel corporations use pseudo-science to dupe millions, deny their culpability in exacerbating this crisis and even work alongside government officials to squash protests, it is increasingly difficult to oppose big polluters without fear of being drowned out, targeted for abuse or both.

In an interview with Salon, Mann opened up on the lessons that activists can learn from his ordeal; on the important role that the United States will need to play in providing effective world leadership on climate change; and on how climate change disinformation and misinformation are being spread more ruthlessly than ever thanks to under-regulated social media platforms like X (formerly known as Twitter) and Meta's Instagram and Facebook, as well as the emergence of artificial intelligence (AI) that threatens to supercharge the information wars.

This interview has been lightly edited for length and clarity.

We are at a critical point in terms of climate change and things are arguably spiraling out of control. It is hard enough for one country to deal with this. How are we realistically supposed to deal with it on a global scale?

Obviously, you have to deal with it separately in each country because politics occur at that scale. Yet one could argue that American leadership is particularly important. We've seen that historically, when the U.S. has led on this issue, you have seen other countries come to the table and rise to the challenge. And that's what makes, for example, this next election so critical, because with one of the two choices we would see essentially not only the disappearance of American leadership on climate, but an agenda that seeks to in fact revoke previous treaty agreements and eliminate entire divisions within the government that exist to address matters of climate and energy policy. The U.S. is the world's largest cumulative carbon polluter and has an especially important role because of that in demonstrating leadership on this issue.

There is sort of a domino effect. If the U.S. leads, then historically we've seen other countries, like I said, come to the table and rise to the challenge. Every country has to establish [climate] policy within the framework of its domestic politics. But leadership from major industrial countries is critical to convince countries like India, for example, to make more substantial commitments to decarbonizing their own economies.

What are the lessons from your lawsuit that can apply to other people who are fighting climate change misinformation?

I think it's important to clarify that that case wasn't technically about misinformation, per se. Obviously, people have the right to express their own opinions about climate and the underlying science, and good faith challenges are actually an important part of science. They help science move forward.

But what is not a good faith sort of criticism or engagement is making false, libelous accusations about scientists, or comparing them to convicted criminals. That exceeds the bounds of what is considered honest discourse. That's what this case was really about. The defendants were making false and clearly libelous claims of fraud and exacerbating those false claims by comparisons with convicted criminals.

I think it was an important precedent because it does say that there are limits. Good faith skepticism and disagreement, that's fine. But when you cross the line into defamation — bad faith, ideologically-motivated lies about science and scientists — then you will face the consequences. This does send a larger message to the scientific community that there are bounds, and if you are subject to dishonest and defamatory attacks against you and your findings, politically and ideologically motivated attacks against your science and your scientists, there is recourse.

I hope it creates maybe a little bit more space where scientists feel more comfortable in speaking out, speaking truth to power, speaking publicly about the broader implications of their science, knowing that there are some basic protections in our system and that they do have recourse if they are subject to those sorts of scurrilous attacks.

I think your story is relevant to more people than just scientists, because there are plenty of us who aren't scientists, but who try to advocate for reforms that will address climate change. We also get personally attacked.

That's right. It does go beyond the narrow sort of category of scientists. It really does get at the issue of how anyone who's subject to bad faith, dishonest, defamatory attacks has always had this recourse. It exists in our system, and this is a reminder that it's there when we need it.

In terms of the larger question of misinformation, how do you think AI fits into the picture, both in terms of pros and cons?

AI is an incredibly useful and important tool in science itself. It allows us to construct far more sophisticated statistical models of processes, to find patterns in data and to make predictions. There is quite a bit of work going on in my field, climate science, on using AI and machine learning to, for example, improve climate model predictions and provide more robust descriptions of interrelationships between processes. All of that is useful for understanding and predicting the impacts of climate change. In my field, that's an application of the good things that can be done with it.

You could also extend that to, for example, communication, which is another passion of mine: communicating the science and its implications to the public and policymakers. There are ways in which AI and machine learning can be used, for example, to assess how misinformation and disinformation propagate in the public sphere on social media and better understand how to effectively target disinformation and misinformation. And there's work going on in that area, some of it within our own center. So that's the good side.

The bad side, of course, is that any tool can be weaponized by bad actors, and we're seeing that undoubtedly right now in the climate arena. We see that on social media, where for a number of years we've seen bot armies used to pollute the social media discourse on climate change and environmental sustainability. And AI and machine learning provide more sophisticated tools for bad actors who can design even more effective, more convincing, more human-like armies of bots whose role, again, is to infect our public discourse and to create confusion and disagreement and dissension in such a way as to impede any meaningful policy progress on climate.

We've seen that going on for years. We know bad actors like Russia and Saudi Arabia are using online tools to do this, and AI just gives them far more potent weaponry with which to continue that assault on our public discourse, particularly when it comes to misinformation and disinformation. Now we have to worry about whether images are trustworthy. We have to worry about whether videos are trustworthy, something that we used to take at face value. When there is audio-visual evidence of something, we're used to sort of taking that as fact.

But now with machine-learning AI techniques, there is the ability to create fake videos where you're attributing words to people that they never spoke, or actions to people that they never took. All of that just provides an even larger arsenal for the bad actors who are out there looking to pollute our public discourse and looking to, again, impede any meaningful global policy progress in dealing with the climate crisis.

What role do you think social media companies like Meta and X should play in curating climate change content?

Great question. Frankly, I think that some of these companies need to be much more strongly regulated. I think they've shown that they're not willing to be responsible on their own. Elon Musk obviously bought Twitter with the intent to destroy it, to weaponize it for bad actors who helped fund his takeover, which include Saudi Arabia and Russia. Twitter has become a cesspool for the promotion of misinformation and disinformation; Elon Musk is not an honest actor. By some measures, he has engaged in criminal behavior, and I think it's pretty clear that he has to be reined in and we are going to need much tougher regulatory policies to deal with companies like Twitter and Facebook, which are effectively monopolies now.

There is no viable substitute for them. They have used anti-competitive practices to prevent any meaningful competition in the spaces that they occupy in the social media world. They have to be regulated like monopolies. We need a far stricter FCC-equivalent governmental body that can regulate the behavior of these monopolies that are now playing a profound role in our public discourse, far more profound than the television broadcast networks, which are regulated. So the long and short of it is that we can't expect them to play nice. They've shown no willingness to do that. We have no reason to expect them to change in that regard. They need to be reined in and they need to be reined in soon because our process of democratic governance itself is now threatened directly by companies like Elon Musk's Twitter.